Update information September 2017: The guideline has been revised throughout to link to MHRA advice and NICE technology appraisals that have been completed since original publication. Minor updates since publication August 2019: Links to the MHRA safety advice on the risk of using retinoids in pregnancy have been updated to the June 2019 version.

This guidance was developed in accordance with the methods outlined in the NICE Guidelines Manual (2009)272.

4.1. Developing the review questions and outcomes

Review questions were developed using a PICO framework (patient, intervention, comparison and outcome) for intervention or experimental reviews; a framework of population, index tests, reference standard and target condition for reviews of diagnostic test accuracy; and a framework of population, presence or absence of risk factors and a list of ideal minimum confounding factors for reviews of prognostic factors. These frameworks guided the literature searching process and facilitated the development of recommendations by the guideline development group (GDG). The review questions were drafted by the NCGC technical team and refined and validated by the GDG. They were based on the key clinical areas identified in the scope (Appendix A). Further information on the outcome measures examined follows this section.

For all interventions reviewed, absolute rates of efficacy and toxicity were also sought to provide information for people with psoriasis and their healthcare providers, in line with the Patient Experience guideline262. That guideline recommends that information is provided as a natural frequency using the same denominator, with intervention and control rates quoted separately. Efficacy data were based on the number of people achieving either PASI75 or clear/nearly clear on the PGA, whichever outcome was available or provided the larger sample size. Toxicity data were reported as withdrawals due to adverse events and the adverse events specified for that intervention.
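The natural-frequency presentation described above can be sketched as follows. This is a minimal illustration under assumed inputs: the helper names and the counts are hypothetical rather than data from any included study, and rounding to whole people is an assumption, not a rule stated in this guideline.

```python
def natural_frequency(events: int, total: int, denominator: int = 100) -> int:
    """Express events/total as a whole number of people per `denominator`."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(events * denominator / total)


def describe(intervention: tuple[int, int], control: tuple[int, int],
             denominator: int = 100) -> str:
    """Quote intervention and control rates separately over the same denominator."""
    i = natural_frequency(*intervention, denominator)
    c = natural_frequency(*control, denominator)
    return (f"{i} in {denominator} people with the intervention versus "
            f"{c} in {denominator} people with the control")


# Hypothetical: 45/150 reach PASI75 with the intervention, 12/148 with placebo
print(describe((45, 150), (12, 148)))
```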

Table

- Patient satisfaction
- Concordance with treatment

4.2. Searching for evidence

4.2.1. Clinical literature search

Systematic literature searches were undertaken to identify evidence within published literature in order to answer the review questions as per The Guidelines Manual [2009]272. Clinical databases were searched using relevant medical subject headings, free-text terms and study type filters where appropriate. Studies published in languages other than English were not reviewed; where possible, searches were restricted to articles published in English. All searches were conducted on the core databases: MEDLINE, Embase, CINAHL and The Cochrane Library. Additional subject-specific databases were used for some questions (for example, PsycINFO for patient views). All searches were updated on 8th March 2012. No papers published after this date were considered.

Search strategies were checked by looking at reference lists of relevant key papers, checking search strategies in other systematic reviews and asking the GDG for known studies. The questions, the study types applied, the databases searched and the years covered can be found in Appendix D.

During the scoping stage, a topic-specific search was conducted for guidelines and reports on the websites listed below and on the websites of organisations relevant to the topic. Grey or unpublished literature was not searched for. All references sent by stakeholders were considered.

- Guidelines International Network database (www.g-i-n.net)

- National Guideline Clearing House (www.guideline.gov/)

- National Institute for Health and Clinical Excellence (NICE) (www.nice.org.uk)

- National Institutes of Health Consensus Development Program (consensus.nih.gov/)

- National Library for Health (www.library.nhs.uk/)

4.2.1.1. Call for evidence

The GDG decided to initiate a ‘call for evidence’ for comparative data to address the question of whether biologics are safe and effective in people with chronic plaque psoriasis who have previously received another biological agent. The GDG believed that important evidence existed that would not be identified by the standard searches. The NCGC contacted all registered stakeholders and asked them to submit any relevant published or unpublished evidence. Evidence was received and noted in the relevant chapter (Chapter 13).

4.2.2. Health economic literature search

Systematic literature searches were also undertaken to identify health economic evidence within published literature relevant to the review questions. The evidence was identified by conducting a broad search relating to psoriasis in the NHS economic evaluation database (NHS EED), the Health Economic Evaluations Database (HEED) and health technology assessment (HTA) databases with no date restrictions. Additionally, the search was run on MEDLINE and Embase, with a specific economic filter, from 2008, to ensure recent publications that had not yet been indexed by these databases were identified. Studies published in languages other than English were not reviewed. Where possible, searches were restricted to articles published in English language.

The search strategies for health economics are included in Appendix D. All searches were updated on 8th March 2012. No papers published after this date were considered.

4.3. Evidence of effectiveness

The Research Fellow:

- Identified potentially relevant studies for each review question from the relevant search results by reviewing titles and abstracts – full papers were then obtained.

- Reviewed full papers against pre-specified inclusion/exclusion criteria to identify studies that addressed the review question in the appropriate population and reported on outcomes of interest (review protocols are included in Appendix C).

- Critically appraised relevant studies using the appropriate checklist as specified in The Guidelines Manual272.

- Extracted key information about the study’s methods and results into evidence tables (evidence tables are included in Appendix H).

- Generated summaries of the evidence by outcome (included in the relevant chapter write-ups):

  - Randomised studies: meta-analysed where appropriate and reported in GRADE profiles (for clinical studies); see below for details

  - Observational studies: data presented as a range of values in GRADE profiles

  - Diagnostic studies: data presented as a range of values in adapted GRADE profiles, with a narrative summary

  - Prognostic studies: data presented as a range of values in summary tables, with matrices for study quality

4.3.1. Inclusion/exclusion

See the review protocols in Appendix C for full details. The GDG were consulted about any uncertainty regarding the inclusion or exclusion of selected studies. This guideline did not consider the management of psoriatic arthritis (PsA); therefore, studies primarily designed to investigate PsA rather than psoriasis affecting the skin were excluded. These were defined as studies primarily designed to treat the joint rather than the skin component of the disease, conducted in a rheumatology rather than a dermatology setting. However, studies were not excluded on the basis of the proportion of participants with PsA alone.

The GDG agreed that in most situations it would be reasonable to extrapolate data from adult populations to children when there were little or no paediatric data. Therefore, the GDG agreed to base treatment recommendations on RCTs in adults, extrapolated to children, if no separate paediatric evidence was found. Any exceptions to this principle are noted in the linking evidence to recommendations (LETR) tables of the relevant review questions. Only two studies62,295 that specifically addressed psoriasis in children were identified and included in the guideline.

Regarding the different phenotypes of psoriasis, unless otherwise stated, data were sought for all types of psoriasis and reported separately if available. Plaque psoriasis is the most common form of the condition (90% of patients) and is usually the type meant by both healthcare professionals and patients when using the term ‘psoriasis’. Other types include guttate psoriasis; pustular psoriasis, which includes generalised pustular psoriasis and localised forms (palmoplantar pustulosis and acrodermatitis continua of Hallopeau); and nail psoriasis. Unless stipulated otherwise, the term psoriasis refers to plaque psoriasis in this guideline; where recommendations relate to types of psoriasis other than chronic plaque disease, the subtype is stated in the recommendation. Psoriasis in all its forms can be modified by site. The phrase ‘difficult-to-treat sites’ encompasses the face, flexures, genitalia, scalp, palms and soles. Psoriasis at these sites is especially high impact and/or may result in functional impairment, may require particular care when prescribing topical therapy and may be very resistant to treatment.

4.3.2. Methods of combining clinical studies

Data synthesis for intervention reviews

Where possible, meta-analyses were conducted to combine the results of studies for each review question using Cochrane Review Manager (RevMan5) software. Fixed-effects (Mantel-Haenszel) techniques were used to calculate risk ratios (relative risks) for the binary outcomes: clear/nearly clear or marked improvement, PASI90, PASI75, relapse, withdrawal due to toxicity, withdrawal due to lack of efficacy, skin atrophy, burn, cataracts, severe adverse events, concordance with treatment and service use. The continuous outcomes (change in PASI, change in DLQI, duration of remission, number of UV treatments, time (or number of treatments) to remission, change in Hospital Anxiety and Depression Scale (HADS)/Beck Depression Inventory (BDI)/Spielberger State-Trait Anxiety Inventory (STAI), change in Psoriasis Life Stress Inventory (PLSI) and change in Psoriasis Disability Index (PDI)) were analysed using an inverse variance method for pooling weighted mean differences; where studies used different scales, standardised mean differences were used. For continuous outcomes, change scores were reported in preference to final values where available; if only final values were available, these were reported and meta-analysed together with change scores. Where reported, time-to-event data were presented as hazard ratios.
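For readers unfamiliar with the Mantel-Haenszel method, the pooled risk ratio is a weighted ratio of per-trial event counts. The sketch below is a minimal illustration assuming simple per-trial counts held in a hypothetical `Trial` record; it computes the point estimate only (no confidence interval or zero-cell correction) and is not the RevMan5 implementation.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    """Hypothetical per-trial counts for one binary outcome."""
    events_trt: int  # events in the intervention arm (a)
    n_trt: int       # participants in the intervention arm (n1)
    events_ctl: int  # events in the control arm (c)
    n_ctl: int       # participants in the control arm (n2)


def mantel_haenszel_rr(trials: list[Trial]) -> float:
    """Fixed-effect Mantel-Haenszel pooled risk ratio (point estimate only).

    RR_MH = sum(a * n2 / N) / sum(c * n1 / N), with N = n1 + n2 per trial.
    """
    numerator = 0.0
    denominator = 0.0
    for t in trials:
        total = t.n_trt + t.n_ctl
        numerator += t.events_trt * t.n_ctl / total
        denominator += t.events_ctl * t.n_trt / total
    return numerator / denominator


trials = [Trial(30, 100, 15, 100), Trial(10, 100, 20, 100)]
print(round(mantel_haenszel_rr(trials), 3))  # pooled RR across both hypothetical trials
```

With a single trial the formula reduces to the ordinary risk ratio, (a/n1)/(c/n2).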

Statistical heterogeneity was assessed by considering the chi-squared test for significance at p<0.1 or an I-squared inconsistency statistic of >50%; either was taken to indicate significant heterogeneity. Where significant heterogeneity was present and the studies differed in their limitations, we carried out sensitivity analyses based on risk of bias, with particular attention paid to allocation concealment, blinding and loss to follow-up (missing data). Where significant heterogeneity was not explained by these sensitivity analyses, we carried out the predefined subgroup analyses specified in the review protocols.
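The heterogeneity statistics referred to above can be computed from per-study estimates and standard errors. This minimal sketch assumes inverse-variance weights and estimates on the analysis scale (for example, log risk ratios or mean differences); the function names are hypothetical. The chi-squared p-value for Q is not computed here; with SciPy available it could be obtained as `scipy.stats.chi2.sf(q, df)`.

```python
def cochran_q_and_i2(estimates: list[float],
                     std_errors: list[float]) -> tuple[float, int, float]:
    """Return Cochran's Q, its degrees of freedom and I-squared (as a percentage)."""
    weights = [1 / se ** 2 for se in std_errors]  # inverse-variance weights
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, estimates))
    df = len(estimates) - 1
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, df, i2


def is_significant_heterogeneity(p_value: float, i2: float) -> bool:
    """Rule described above: chi-squared p < 0.1 or I-squared > 50%."""
    return p_value < 0.1 or i2 > 50


q, df, i2 = cochran_q_and_i2([0.0, 2.0], [0.5, 0.5])
print(q, df, i2)
```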

Potential differences in effect between subgroups were assessed using the chi-squared test for heterogeneity between subgroups. If no sensitivity analysis completely resolved the statistical heterogeneity, a random-effects (DerSimonian and Laird) model was used to provide a more conservative estimate of the effect.
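The DerSimonian and Laird model adds an estimated between-study variance (tau-squared) to each study's variance before pooling, which widens the confidence interval when studies disagree. The sketch below is illustrative only: it assumes generic inverse-variance inputs (estimates and standard errors on the analysis scale) and uses hypothetical names.

```python
import math


def dersimonian_laird(estimates: list[float],
                      std_errors: list[float]) -> tuple[float, float, float]:
    """Random-effects pooled estimate, its standard error and tau-squared."""
    if len(estimates) < 2:
        raise ValueError("at least two studies are needed to estimate tau-squared")
    w = [1 / se ** 2 for se in std_errors]                # fixed-effect weights
    sum_w = sum(w)
    fixed = sum(wi * y for wi, y in zip(w, estimates)) / sum_w
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, estimates))
    df = len(estimates) - 1
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)                         # between-study variance
    w_star = [1 / (se ** 2 + tau2) for se in std_errors]  # random-effects weights
    pooled = sum(wi * y for wi, y in zip(w_star, estimates)) / sum(w_star)
    return pooled, math.sqrt(1 / sum(w_star)), tau2


pooled, se, tau2 = dersimonian_laird([0.0, 2.0], [0.5, 0.5])
print(pooled, se, tau2)
```

In this example the fixed-effect standard error would be about 0.35, whereas the random-effects standard error is 1.0, which is why the random-effects result is described as more conservative.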

The means and standard deviations of continuous outcomes for each intervention group were required for meta-analysis. Where standard deviations were not reported, the standard error of the mean difference between groups was calculated if p values or 95% confidence intervals were reported, and meta-analysis was undertaken with the mean difference and standard error using the generic inverse variance method in Cochrane Review Manager (RevMan5) software. Where p values were reported as “less than” a threshold, a conservative approach was taken: for example, if the p value was reported as “p ≤ 0.001”, the standard deviations were calculated using a p value of 0.001. If these statistical measures were not available, the available data were reported narratively but not included in the meta-analysis.
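The back-calculation of a standard error from a reported 95% confidence interval or p value can be sketched as below. This assumes a normal approximation for the mean difference (for small trials a t distribution is often preferred, which this sketch does not use), and the function names are hypothetical. Passing the reported threshold (for example 0.001 for “p ≤ 0.001”) gives a larger standard error than the true, smaller p value would, so the study receives less weight; this is the conservative direction described above.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, SD 1)


def se_from_ci(lower: float, upper: float, level: float = 0.95) -> float:
    """Standard error of a mean difference from its confidence interval."""
    z = _STD_NORMAL.inv_cdf(1 - (1 - level) / 2)  # about 1.96 for a 95% CI
    return (upper - lower) / (2 * z)


def se_from_p(mean_difference: float, p_value: float) -> float:
    """Standard error implied by a two-sided p value for a mean difference.

    For 'p <= 0.001' pass p_value=0.001: the threshold is larger than the
    unknown true p value, so the resulting SE is larger (conservative).
    """
    z = _STD_NORMAL.inv_cdf(1 - p_value / 2)
    return abs(mean_difference) / z


print(round(se_from_ci(-0.8, 3.2), 3))
print(round(se_from_p(2.0, 0.001), 3))
```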

For binary outcomes, absolute event rates were also calculated with the GRADEpro software, using the control-arm event rate from the pooled results.
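As an illustration of how an absolute effect can be derived from a pooled risk ratio and the control-arm event rate, the sketch below expresses the difference per 1000 people. It is a minimal sketch under the assumption that the absolute effect equals control risk × (RR − 1); the function name and figures are hypothetical, and GRADEpro's own output may round or present this differently.

```python
def absolute_effect_per_1000(control_events: int, control_total: int,
                             pooled_rr: float) -> int:
    """Difference in events per 1000 people implied by a pooled risk ratio.

    Assumes absolute effect = control-arm risk x (RR - 1), scaled to 1000.
    Positive values mean more events with the intervention, negative fewer.
    """
    control_risk = control_events / control_total
    return round(1000 * control_risk * (pooled_rr - 1))


# Hypothetical: 20 events among 100 control participants, pooled RR 1.5
print(absolute_effect_per_1000(20, 100, 1.5))
```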

Network meta-analysis was conducted for the review questions on the topical therapies for chronic plaque psoriasis at the trunk and limbs and high impact/difficult-to-treat sites. This allowed indirect comparisons of all the drugs included in the review when no direct comparison was available.

A hierarchical Bayesian network meta-analysis (NMA) was performed using the WinBUGS software19. We used a multi-arm random-effects model template from the University of Bristol website (https://www.bris.ac.uk/cobm/research/mpes/mtc.html). This model accounts for the correlation between arms in trials with any number of arms. The model was a random-effects logistic regression model, with parameters estimated by Markov chain Monte Carlo simulation.

Networks of evidence were developed and analysed based on the following binary outcomes:

- Clear/nearly clear or marked improvement (at least 75% improvement) on Investigator’s assessment of overall global improvement (IAGI) or clear/nearly clear/minimal (not mild) on Physician’s Global Assessment (PGA)

- Clear/nearly clear or marked improvement (at least 75% improvement) on Patient’s assessment of overall global improvement (PAGI) or clear/nearly clear/minimal (not mild) on Patient’s Global Assessment

The odds ratios were calculated and converted into relative risks for comparison to the direct comparisons. The ranking of interventions was also calculated based on their relative risks compared to the control group. For details on the methods of these analyses, see Appendix K and Appendix L.
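The guideline does not state the formula used to convert odds ratios into relative risks. One standard conversion, shown here as an assumption, uses the control-group (baseline) risk p0: RR = OR / (1 − p0 + p0 × OR), which follows directly from the definitions of odds and risk. The function name is hypothetical, and the result depends on the baseline risk chosen.

```python
def odds_ratio_to_risk_ratio(odds_ratio: float, control_risk: float) -> float:
    """Convert an odds ratio to a risk ratio at a given control-group risk p0.

    RR = OR / (1 - p0 + p0 * OR); assumed here for illustration, and exact
    only for the chosen value of p0.
    """
    if not 0.0 <= control_risk < 1.0:
        raise ValueError("control_risk must be in [0, 1)")
    return odds_ratio / (1 - control_risk + control_risk * odds_ratio)


print(round(odds_ratio_to_risk_ratio(2.0, 0.5), 4))
```

For rare outcomes (p0 close to 0) the risk ratio approaches the odds ratio; for common outcomes the two diverge.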

Data synthesis for prognostic factor reviews

Odds ratios, relative risks or hazard ratios, with their 95% confidence intervals, from multivariate analyses were extracted from the papers. Data were not combined in a meta-analysis for observational studies. Sensitivity analyses were carried out on the basis of study quality and results were reported as ranges.

Data synthesis for diagnostic test accuracy reviews

For diagnostic test accuracy studies, the following outcomes were reported: sensitivity, specificity, positive predictive value, negative predictive value, likelihood ratios and pre- and post-test probabilities. Where these outcomes were not reported, 2 by 2 tables were constructed from the raw data to allow calculation of these accuracy measures. Where possible, the results for sensitivity and specificity were presented using Cochrane Review Manager (RevMan5) software.
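The accuracy measures listed above follow directly from a 2 by 2 table of index test result against the reference standard. This minimal sketch uses hypothetical counts and function names and does not handle zero cells (for example, a specificity of 1 makes the positive likelihood ratio undefined). Post-test probability is obtained by converting the pre-test probability to odds, multiplying by the likelihood ratio and converting back.

```python
def diagnostic_accuracy(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Accuracy measures from a 2 by 2 table (index test vs reference standard)."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "lr_positive": sensitivity / (1 - specificity),
        "lr_negative": (1 - sensitivity) / specificity,
    }


def post_test_probability(pre_test: float, likelihood_ratio: float) -> float:
    """Pre-test probability -> odds, multiply by the LR, convert back to probability."""
    pre_odds = pre_test / (1 - pre_test)
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1 + post_odds)


# Hypothetical table: 100 people with and 100 without the target condition
acc = diagnostic_accuracy(tp=90, fp=20, fn=10, tn=80)
print(acc)
print(post_test_probability(0.5, acc["lr_positive"]))
```

When the pre-test probability equals the prevalence in the table (here 100/200 = 0.5), the post-test probability after a positive result equals the PPV, which is a useful consistency check.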

Data synthesis for diagnostic test validity and reliability reviews

For investigating the validity and reliability of scales recording the severity and impact of psoriasis, the following outcomes were reported: convergent validity, discriminant validity, internal consistency, inter-rater reliability, intra-rater reliability, practicability and sensitivity to change. Where possible, appropriate statistics were reported for each of these outcomes, with their 95% confidence intervals (or standard deviations for mean values): Pearson product-moment correlation coefficient, Spearman rank correlation coefficient, kappa statistics, intra-class correlation, internal consistency coefficients (Cronbach’s alpha) and time to administer the test. Data were summarised across outcomes and comparisons in tabular format, and any heterogeneity was assessed.
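Cronbach's alpha, one of the internal consistency coefficients listed above, compares the sum of the item variances with the variance of the total score. This is a minimal sketch assuming complete data (no missing item responses) and a hypothetical data layout of one list of scores per item; the function name is illustrative.

```python
from statistics import variance


def cronbach_alpha(item_scores: list[list[float]]) -> float:
    """Cronbach's alpha for k items; item_scores[i][j] is person j's score on item i.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(item_scores)
    if k < 2:
        raise ValueError("at least two items are needed")
    totals = [sum(person) for person in zip(*item_scores)]  # total score per person
    item_variance_sum = sum(variance(item) for item in item_scores)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical scores: two items answered by three people
print(round(cronbach_alpha([[1, 2, 3], [1, 3, 2]]), 3))
```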

4.3.3. Type of studies

For most intervention evidence reviews in this guideline, randomised controlled trials (RCTs) were included. Where the GDG believed RCT data would not be appropriate this is detailed in the protocols in Appendix C. RCTs were included as they are considered the most robust type of study design that could produce an unbiased estimate of the intervention effects.

For diagnostic evidence reviews, diagnostic cohort and case-control studies were included; for prognostic reviews, cohort studies were included.

4.3.4. Types of analysis

Estimates of effect from individual studies were based on a modified available case analysis (ACA) where possible or on an intention to treat (ITT) analysis if this was not possible.

In an ACA, only the data available for participants at the follow-up point are analysed, without imputing missing data. In the modification used for binary outcomes, participants known to have dropped out due to lack of efficacy were included in the denominator for efficacy outcomes, and those known to have dropped out due to adverse events were included in both the numerator and the denominator when analysing adverse events. This method was used rather than intention-to-treat analysis to avoid making assumptions about participants whose outcome data were not available; instead, it assumes that those who drop out have the same event rate as those who continue. It also avoids incorrectly weighting studies in meta-analysis and overestimating the precision of the effect, which would result from using a denominator larger than the number of participants with outcome data. If a study had a high drop-out rate, a sensitivity analysis was performed to determine whether using an intention-to-treat analysis changed the effect; if it did, both analyses were presented.
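The denominators used in the modified available case analysis, and how they differ from the intention-to-treat denominator described in the following paragraph, can be sketched as follows. The `Arm` record, its field names and the counts are hypothetical; participants who dropped out for other reasons are simply left out of the ACA denominators.

```python
from dataclasses import dataclass


@dataclass
class Arm:
    """Hypothetical counts for one trial arm (field names are illustrative)."""
    randomised: int
    completers: int             # participants with outcome data at follow-up
    responders: int             # efficacy events among completers
    ae_events: int              # adverse events among completers
    dropout_lack_efficacy: int  # known drop-outs due to lack of efficacy
    dropout_adverse_event: int  # known drop-outs due to adverse events


def modified_aca_efficacy(arm: Arm) -> tuple[int, int]:
    """(events, denominator): lack-of-efficacy drop-outs added to the denominator only."""
    return arm.responders, arm.completers + arm.dropout_lack_efficacy


def modified_aca_adverse_events(arm: Arm) -> tuple[int, int]:
    """(events, denominator): adverse-event drop-outs added to numerator and denominator."""
    return (arm.ae_events + arm.dropout_adverse_event,
            arm.completers + arm.dropout_adverse_event)


def itt_efficacy(arm: Arm) -> tuple[int, int]:
    """ITT for comparison: all randomised, missing outcomes counted as non-events."""
    return arm.responders, arm.randomised


arm = Arm(randomised=100, completers=80, responders=40, ae_events=5,
          dropout_lack_efficacy=8, dropout_adverse_event=6)
print(modified_aca_efficacy(arm), modified_aca_adverse_events(arm), itt_efficacy(arm))
```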

In an ITT analysis, all randomised participants are included in the final analysis in the intervention and control groups to which they were originally assigned. Participants lost to follow-up were assumed not to have experienced the outcome of interest (for categorical outcomes) and not to change the average scores of their assigned groups considerably (for continuous outcomes). ITT analyses of this kind tend to bias results towards no difference; they are therefore a conservative approach, and the estimated effect may be smaller than the true effect.

4.3.5. Unit of analysis

This guideline includes RCTs with different units of analysis. Some studies randomised individual participants to the intervention (parallel or between-patient studies) while others randomised body halves to the intervention (within-patient studies, analogous to crossover trials).

It was recognised that data from within-patient trials should be adjusted for the correlation coefficient relating to the comparison of paired data. Therefore, if sufficient data were available, this was calculated and the standard error was adjusted accordingly.
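For within-patient (body-half) comparisons, one standard way to make this adjustment, shown here as an assumption since the guideline does not give the formula, is to use the variance of a paired difference: SE = sqrt((SD_A² + SD_B² − 2·r·SD_A·SD_B) / n), where r is the within-patient correlation and n the number of participants. The function name and values are hypothetical.

```python
import math


def paired_mean_difference_se(sd_a: float, sd_b: float, n: int, r: float) -> float:
    """SE of a within-patient mean difference for n paired observations.

    Uses Var(A - B) = SD_A^2 + SD_B^2 - 2 * r * SD_A * SD_B, divided by n.
    """
    variance_of_difference = sd_a ** 2 + sd_b ** 2 - 2 * r * sd_a * sd_b
    return math.sqrt(variance_of_difference / n)


print(paired_mean_difference_se(2.0, 2.0, 16, 0.5))  # correlation assumed to be 0.5
print(paired_mean_difference_se(2.0, 2.0, 16, 0.0))  # as if body halves were independent
```

With r = 0 the result matches the standard error obtained by treating the two body halves as independent groups; any positive correlation gives a smaller standard error, which is why pooling without this adjustment tends to underweight within-patient studies.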

Additionally, within- and between-patient data were pooled, accepting that this may result in underweighting of the within-patient studies; this is, however, a conservative approach. Sensitivity analyses were undertaken to investigate whether the effect size differed systematically between within- and between-patient studies, and there was no evidence that it did.

4.3.6. Appraising the quality of evidence by outcomes

The evidence for outcomes from the included RCTs and observational intervention studies was evaluated and presented using an adaptation of the ‘Grading of Recommendations Assessment, Development and Evaluation (GRADE) toolbox’ developed by the international GRADE working group (http://www.gradeworkinggroup.org/). The GRADEpro software developed by the GRADE working group was used to assess the quality of each outcome, taking into account individual study quality and the meta-analysis results. The summary of findings was presented as one table in the guideline (called clinical evidence profiles). This table includes details of the quality assessment, the pooled outcome data, an absolute measure of intervention effect where appropriate, and the summary of quality of evidence for that outcome. In this table, the columns for intervention and control show the sum of the study arm sample sizes for continuous outcomes. For binary outcomes such as the number of patients with an adverse event, the event rates (n/N across studies: the sum of the number of patients with events divided by the sum of the number of patients) are shown with percentages. These are for information only and are not intended to show pooling, which was performed using a weighted meta-analysis as described above. Reporting or publication bias was taken into consideration in the quality assessment, and included in the Clinical Study Characteristics table, only if it was apparent.

Each outcome was examined separately for the quality elements listed and defined in Table 1, and each element was graded using the quality levels listed in Table 2. The main criteria considered in rating these elements are discussed below (see section 4.3.7 Grading the quality of clinical evidence). Footnotes were used to describe the reasons for grading a quality element as having serious or very serious problems. The ratings for each component were summed to obtain an overall assessment for each outcome (Table 3).

Table 1

Description of quality elements in GRADE for intervention studies.

Table 2

Levels of quality elements in GRADE.

Table 3

Overall quality of outcome evidence in GRADE.

The GRADE toolbox is currently designed only for randomised trials and observational intervention studies but we adapted the quality assessment elements and outcome presentation for diagnostic accuracy studies.

4.3.7. Grading the quality of clinical evidence

After results were pooled, the overall quality of evidence for each outcome was considered. The following procedure was adopted when using GRADE:

- A quality rating was assigned based on the study design: RCTs started as HIGH and observational studies as LOW.

- The rating was then downgraded for the specified criteria: study limitations, inconsistency, indirectness, imprecision and reporting bias. These criteria are detailed below. Observational studies were upgraded if there was a large magnitude of effect or a dose-response gradient, or if all plausible confounding would reduce a demonstrated effect or suggest a spurious effect when results showed no effect. Each quality element considered to have “serious” or “very serious” problems was downgraded by 1 or 2 points respectively.

- The downgraded/upgraded marks were then summed and the overall quality rating was revised. For example, all RCTs started as HIGH and the overall quality became MODERATE, LOW or VERY LOW if 1, 2 or 3 points were deducted respectively.

- The reasons or criteria used for downgrading were specified in the footnotes.
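The scoring procedure above amounts to simple arithmetic on an ordered scale. This sketch assumes one point per level, downgrades of 0, 1 or 2 per quality element, and clamping at VERY LOW and HIGH; the function and argument names are hypothetical and not part of GRADEpro.

```python
LEVELS = ["VERY LOW", "LOW", "MODERATE", "HIGH"]
STARTING_LEVEL = {"rct": 3, "observational": 1}  # index into LEVELS


def grade_quality(design: str, downgrades: dict[str, int], upgrades: int = 0) -> str:
    """Overall GRADE quality for one outcome.

    downgrades maps each quality element (e.g. "imprecision") to 0, 1 (serious)
    or 2 (very serious); upgrades are applied to observational studies only.
    """
    if any(points not in (0, 1, 2) for points in downgrades.values()):
        raise ValueError("each element is downgraded by 0, 1 or 2 points")
    if design != "observational":
        upgrades = 0
    score = STARTING_LEVEL[design] - sum(downgrades.values()) + upgrades
    return LEVELS[max(0, min(score, len(LEVELS) - 1))]


print(grade_quality("rct", {"risk of bias": 1, "imprecision": 1}))
```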

The details of the criteria used for each of the main quality elements are discussed further in sections 4.3.8 to 4.3.11.

4.3.8. Study limitations

The main limitations for randomised controlled trials are listed in Table 4.

Table 4

Study limitations of randomised controlled trials.

The GDG accepted that participant blinding in psychological or educational intervention studies was impossible. Nevertheless, open-label studies of cognitive behavioural therapy and self-management were downgraded, to maintain a consistent approach to quality rating across the guideline and in recognition that some of the important outcomes considered were subjective or patient reported (patient satisfaction, reduced distress/anxiety/depression and improved quality of life (change in DLQI/PDI)) and therefore highly subject to bias in an open-label setting.

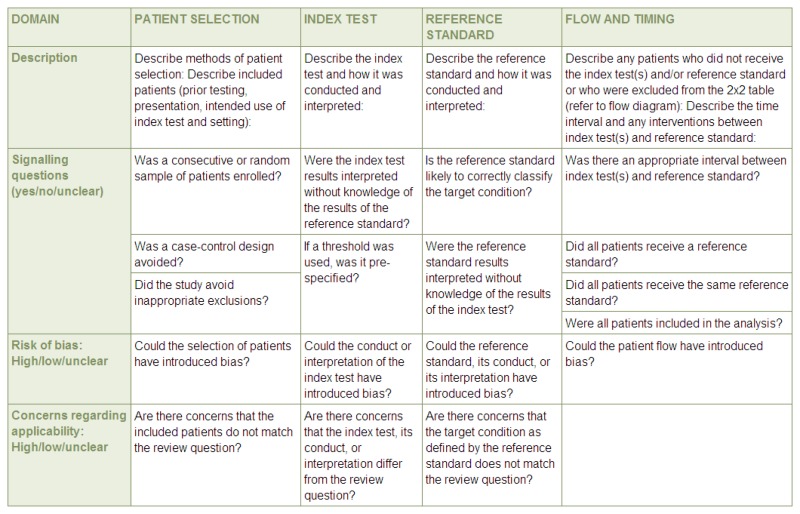

Evidence for diagnostic data was evaluated by study using the Quality Assessment of Diagnostic Accuracy Studies version 2 (QUADAS-2) checklist. QUADAS-2 assesses risk of bias and applicability in primary diagnostic accuracy studies across 4 domains (see Figure 1):

Figure 1

Summary of QUADAS-2 with list of signalling, risk of bias and applicability questions. Source: University of Bristol, QUADAS-2 website (http://www.bris.ac.uk/quadas/quadas-2)

- Patient selection

- Index test

- Reference standard

- Flow and timing

For prognostic studies, quality was assessed using a modified version of the Checklist for Prognostic Studies (NICE Guidelines Manual, 2009272). The quality rating was derived by assessing the risk of bias across 5 domains: selection bias; attrition bias; prognostic factor bias; outcome bias; and confounders and analysis bias, with outcome measurement and confounders assessed per outcome. GRADE profiles were not used because the evidence was not combined in a meta-analysis; instead, a quality matrix was used, as it shows the limitations of each study more clearly and is easier to interpret.

For validity and reliability studies the quality was rated according to the following domains relevant for each outcome. Note that study size was not considered in the quality rating but was taken into account by the GDG when assessing the data. Applicability was considered for all outcomes in terms of how the tests were analysed (dichotomised/categorised appropriately or analysed as continuous variables) and who was applying the tests (experience and setting).

Validity

Construct validity and sensitivity to change:

- Time between measurements not too long

- Test order randomised

- Both tests conducted in each patient

- Both tests conducted by the same raters, or raters randomised to tests, with raters blinded

Reliability

Inter-rater reliability:

- Randomisation of raters to patients (including order of raters)

- Blinding of raters to the results of other raters

- Not too long between tests

- Appropriate statistics – not correlation

Test-retest reliability and intra-rater reliability:

- The same measurement procedure

- The same observer and same measuring instrument

- Same environmental conditions

- Repetition over a short period of time

Internal consistency reliability:

- Same measurement procedure

- Same measuring instrument

- Same environmental conditions (e.g. lighting) and same location

- Appropriate statistical analysis

4.3.9. Inconsistency

Inconsistency refers to unexplained heterogeneity of results. When estimates of the treatment effect differ widely across studies (that is, there is heterogeneity or variability in results), this suggests true differences in the underlying treatment effect. When heterogeneity existed (chi-squared p<0.1 or I-squared inconsistency statistic >50%) but no plausible explanation could be found, the quality of evidence was downgraded by one or two levels, depending on how much uncertainty the inconsistency added to the results. In addition to the I-squared and chi-squared values, the decision to downgrade also depended on factors such as whether the intervention was associated with benefit in all other outcomes, and whether uncertainty about the magnitude of benefit (or harm) for the outcome showing heterogeneity would influence the overall judgement about net benefit or harm across all outcomes.

If inconsistency could be explained by a pre-specified subgroup analysis, the GDG took this into account and considered whether to make separate recommendations based on the identified explanatory factors, that is, population and intervention. Where subgroup analysis gave a plausible explanation of heterogeneity, the quality of evidence was not downgraded.

For diagnostic, prognostic, and validity and reliability studies, where no meta-analysis could be performed, inconsistency was assessed by comparing the tabulated results across studies and identifying any conflicting findings. These were discussed by the GDG and recorded in the LETR tables.

4.3.10. Indirectness

Directness refers to the extent to which the populations, intervention, comparisons and outcome measures are similar to those defined in the inclusion criteria for the reviews. Indirectness is important when these differences are expected to contribute to a difference in effect size, or may affect the balance of harms and benefits considered for an intervention.

In this guideline, if more than 50% of participants had psoriatic arthritis, the evidence was considered indirect for the psoriasis population and was downgraded.

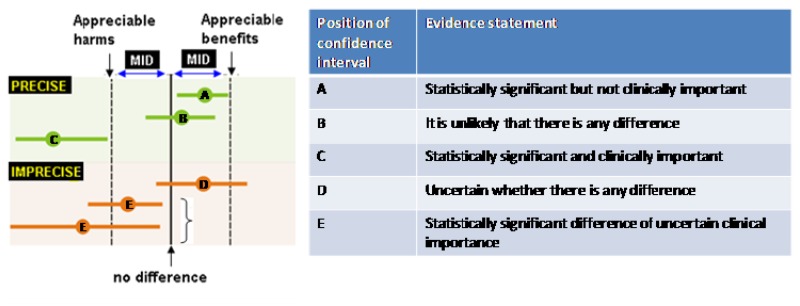

4.3.11. Imprecision

The minimal important difference (MID) in the outcome between the two groups was the main criterion considered.

The thresholds of important benefits or harms, or the MID, for an outcome are important considerations for determining whether there is a “clinically important” difference between intervention and control groups and for assessing imprecision. For continuous outcomes, the MID is defined as “the smallest difference in score in the outcome of interest that informed patients or informed proxies perceive as important, either beneficial or harmful, and that would lead the patient or clinician to consider a change in the management”.126,162,357,358 An effect estimate larger than the MID is considered to be “clinically important”.

The difference between two interventions, as observed in the studies, was compared against the MID when considering whether the findings were of “clinical importance”; this is useful to guide decisions. For example, if the effect size was small (less than the MID), this finding suggests that there may not be enough difference to strongly recommend one intervention over the other based on that outcome.

The criteria applied for imprecision are based on the confidence interval of the pooled estimate of effect (or the best single estimate), as illustrated in Figure 2 and outlined in Table 5. Essentially, if the confidence interval crossed both the MID threshold and the line of no effect, the range of values it encompassed was consistent with two different decisions; there was therefore uncertainty in the effect estimate, which was rated as imprecise.

Table 5

Criteria applied to determine precision for dichotomous and continuous outcomes.
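The imprecision criteria for a risk ratio can be sketched by counting how many MID thresholds the 95% confidence interval crosses. The mapping used here (no threshold crossed: not serious; one: serious; both: very serious) is an assumption about how Table 5 is typically applied, and the default thresholds of 0.75 and 1.25 correspond to the GRADEpro defaults of 0.25 either side of the line of no effect; the function names are hypothetical.

```python
def mid_thresholds_crossed(ci_low: float, ci_high: float,
                           lower_mid: float = 0.75, upper_mid: float = 1.25) -> int:
    """Number of MID thresholds lying strictly inside the confidence interval."""
    return sum(ci_low < mid < ci_high for mid in (lower_mid, upper_mid))


def imprecision_rating(ci_low: float, ci_high: float,
                       lower_mid: float = 0.75, upper_mid: float = 1.25) -> str:
    """Assumed mapping: 0 thresholds crossed -> not serious, 1 -> serious, 2 -> very serious."""
    crossed = mid_thresholds_crossed(ci_low, ci_high, lower_mid, upper_mid)
    return ("not serious", "serious", "very serious")[crossed]


print(imprecision_rating(0.80, 1.40))
```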

The MID thresholds for dichotomous outcomes were based on the GRADEpro default values of 0.25 either side of the line of no effect (that is, relative risks of 0.75 and 1.25). For continuous outcomes, the default MID was calculated as 0.5 multiplied by the standard deviation, taken as the median of the baseline standard deviations of all studies reporting the outcome or, if baseline values were not reported for all of these studies, the median control group rate.
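The default continuous MID described above is a simple calculation. This sketch assumes the baseline standard deviations have already been extracted from the studies reporting the outcome; the function name and values are hypothetical.

```python
from statistics import median


def default_continuous_mid(baseline_sds: list[float]) -> float:
    """Default MID: 0.5 x the median baseline SD across studies reporting the outcome."""
    if not baseline_sds:
        raise ValueError("at least one baseline SD is needed")
    return 0.5 * median(baseline_sds)


# Hypothetical baseline SDs from three studies
print(default_continuous_mid([4.0, 6.0, 10.0]))
```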

For the key outcomes the GDG discussed on a case-by-case basis whether the estimates were precise, and GRADE ratings were altered accordingly when the default MIDs were not deemed to be appropriate.

For diagnostic reviews, imprecision was assessed on the basis of sensitivity, specificity, PPV and NPV. If there was no majority in the assessment of imprecision across these statistics, greater weight was given to the outcomes deemed most important; for example, where it was most important for a test to be accurate at ruling out a diagnosis, the imprecision assessment was based on sensitivity and NPV.

4.4. Evidence of cost-effectiveness

Evidence on cost-effectiveness related to the key clinical issues being addressed in the guideline was sought. The health economist:

- Undertook a systematic review of the economic literature

- Undertook new cost-effectiveness analysis in priority areas

4.4.1. Literature review

The Health Economist:

- Identified potentially relevant studies for each review question from the economic search results by reviewing titles and abstracts – full papers were then obtained.

- Reviewed full papers against pre-specified inclusion/exclusion criteria to identify relevant studies (see below for details).

- Critically appraised relevant studies using the economic evaluations checklist as specified in The Guidelines Manual272.

- Extracted key information about the study’s methods and results into evidence tables (evidence tables are included in Appendix I).

- Generated summaries of the evidence in NICE economic evidence profiles (included in the relevant chapter write-ups) – see below for details.

4.4.1.1. Inclusion/exclusion

Full economic evaluations (studies comparing costs and health consequences of alternative courses of action: cost–utility, cost-effectiveness, cost-benefit and cost-consequence analyses) and comparative costing studies that addressed the review question in the relevant population were considered potentially applicable as economic evidence.

Studies that only reported cost per hospital (not per patient), or only reported average cost effectiveness without disaggregated costs and effects, were excluded. Abstracts, posters, reviews, letters/editorials, foreign language publications and unpublished studies were excluded. Studies judged to have an applicability rating of ‘not applicable’ were excluded (this included studies that took the perspective of a non-OECD country).

Remaining studies were prioritised for inclusion based on their relative applicability to the development of this guideline and their study limitations. For example, if a high-quality, directly applicable UK analysis was available, other less relevant studies may not have been included. Where exclusions occurred on this basis, this is noted in the relevant section.

For more details about the assessment of applicability and methodological quality, see the economic evaluation checklist (The Guidelines Manual, Appendix H272) and the health economics research protocol in Appendix C.

When no relevant economic analysis was found in the economic literature review, relevant UK NHS unit costs for the compared interventions were presented to the GDG to inform the possible economic implications of the recommendations to be made.

4.4.1.2. NICE economic evidence profiles

The NICE economic evidence profile has been used to summarise cost and cost-effectiveness estimates. The economic evidence profile shows, for each economic study, an assessment of applicability and methodological quality, with footnotes indicating the reasons for the assessment. These assessments were made by the health economist using the economic evaluation checklist from The Guidelines Manual, Appendix H272. It also shows incremental costs, incremental outcomes (for example, QALYs) and the incremental cost-effectiveness ratio from the primary analysis, as well as information about the assessment of uncertainty in the analysis.

If a non-UK study was included in the profile, the results were converted into pounds sterling using the appropriate purchasing power parity296.
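The purchasing power parity conversion is a single ratio, sketched below in Python. The function name and all figures are hypothetical; the sketch assumes both PPPs are expressed in national currency units per common reference unit (OECD tables use the US dollar), and it does not adjust for price year, which this section does not describe.

```python
def to_gbp_via_ppp(cost_local, ppp_local, ppp_uk):
    """Convert a cost reported in another currency into pounds sterling.

    Both PPPs must use the same reference unit, expressed as national
    currency units per reference unit. Dividing by ppp_local gives
    reference units; multiplying by ppp_uk gives pounds sterling.
    """
    return cost_local * ppp_uk / ppp_local


# Hypothetical figures for illustration only.
cost_gbp = to_gbp_via_ppp(cost_local=1000.0, ppp_local=0.80, ppp_uk=0.70)
print(round(cost_gbp, 2))  # 875.0
```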

Table 6

Content of NICE economic profile.

Where economic studies compare multiple strategies, results are reported at the end of the relevant chapter in an alternative table summarising the study as a whole. A comparison is ‘appropriate’ where an intervention is compared with the next most expensive non-dominated option; a clinical strategy is said to ‘dominate’ the alternatives when it is both more effective and less costly. Footnotes indicate if a comparison was ‘inappropriate’ in the analysis.
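Dominance and the ‘appropriate’ comparison can be illustrated with a short incremental analysis in Python. The `Strategy` type, function names, costs and QALYs are all hypothetical. Each non-dominated option is compared with the next less costly non-dominated option, which is how ‘next most expensive non-dominated option’ is read here. The extended-dominance step is a common part of incremental analysis but is not described in this section, so treat it as an assumption.

```python
from dataclasses import dataclass


@dataclass
class Strategy:
    name: str
    cost: float   # mean cost per patient (GBP)
    qalys: float  # mean QALYs per patient


def icer(a, b):
    """Incremental cost per QALY of moving from strategy a to strategy b."""
    return (b.cost - a.cost) / (b.qalys - a.qalys)


def incremental_analysis(strategies):
    """Return [(name, ICER)] for non-dominated strategies, cheapest first.

    Each ICER compares a strategy with the next less costly non-dominated
    option; the cheapest strategy has no comparator, so its ICER is None.
    """
    if not strategies:
        return []
    # Order by cost; at equal cost, the more effective option comes first.
    ordered = sorted(strategies, key=lambda s: (s.cost, -s.qalys))

    # Simple dominance: drop any strategy that costs at least as much as a
    # retained one while yielding no more QALYs.
    frontier = []
    for s in ordered:
        if frontier and s.qalys <= frontier[-1].qalys:
            continue
        frontier.append(s)

    # Extended dominance (assumption, not described in the text): drop a
    # strategy whose ICER exceeds that of the next costlier option.
    i = 1
    while i < len(frontier) - 1:
        if icer(frontier[i - 1], frontier[i]) > icer(frontier[i], frontier[i + 1]):
            del frontier[i]
            i = max(i - 1, 1)  # recheck the previous option's new neighbour
        else:
            i += 1

    results = [(frontier[0].name, None)]
    for prev, cur in zip(frontier, frontier[1:]):
        results.append((cur.name, icer(prev, cur)))
    return results


options = [
    Strategy("A", 1000.0, 1.00),
    Strategy("B", 4000.0, 1.25),
    Strategy("C", 2500.0, 0.75),  # costs more than A for fewer QALYs
    Strategy("D", 13000.0, 1.50),
]
for name, ratio in incremental_analysis(options):
    print(name, ratio)
# A None
# B 12000.0
# D 36000.0
```

Strategy C never appears in the output because A dominates it, so comparing any option with C would be an ‘inappropriate’ comparison in the sense used above.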

4.4.2. Undertaking new health economic analysis

As well as reviewing the published economic literature for each review question, as described above, new economic analysis was undertaken by the Health Economist in priority areas. Priority areas for new health economic analysis were agreed by the GDG after formation of the review questions and consideration of the available health economic evidence.

Additional data for the analysis were identified as required through further literature searches undertaken by the Health Economist and through discussion with the GDG. Model structure, inputs and assumptions were explained to and agreed by the GDG members during meetings, and they commented on subsequent revisions.

See Appendices M, N and O for details of the health economic analyses undertaken for the guideline.

4.4.3. Cost-effectiveness criteria

NICE’s report ‘Social value judgements: principles for the development of NICE guidance’ sets out the principles that GDGs should consider when judging whether an intervention offers good value for money 271,272.

In general, an intervention was considered to be cost effective if either of the following criteria applied (given that the estimate was considered plausible):

- The intervention dominated other relevant strategies (that is, it was both less costly in terms of resource use and more clinically effective compared with all the other relevant alternative strategies), or

- The intervention cost less than £20,000 per quality-adjusted life-year (QALY) gained compared with the next best strategy.
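The two criteria above can be expressed as a small Python check for one intervention against its comparator. The function and constant names are hypothetical; the check is pairwise, so establishing dominance over all relevant alternatives would mean repeating it for each one, and the GDG's judgement on plausibility of the estimate is not something the code captures.

```python
THRESHOLD_GBP_PER_QALY = 20_000


def meets_cost_effectiveness_criteria(delta_cost, delta_qalys,
                                      threshold=THRESHOLD_GBP_PER_QALY):
    """Apply the two criteria to one intervention versus its comparator.

    delta_cost and delta_qalys are intervention minus comparator.
    """
    # Criterion 1: dominance (less costly and more effective).
    if delta_cost < 0 and delta_qalys > 0:
        return True
    # Criterion 2: cost per QALY gained below the threshold.
    if delta_qalys > 0:
        return delta_cost / delta_qalys < threshold
    # No QALY gain: neither criterion applies.
    return False


print(meets_cost_effectiveness_criteria(-500.0, 0.25))  # True (dominates)
print(meets_cost_effectiveness_criteria(3000.0, 0.25))  # True (12,000 per QALY)
print(meets_cost_effectiveness_criteria(9000.0, 0.25))  # False (36,000 per QALY)
```

An estimate of exactly £20,000 per QALY returns False here, because the text specifies a cost of less than £20,000.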

If the GDG recommended an intervention that was estimated to cost more than £20,000 per QALY gained, or did not recommend one that was estimated to cost less than £20,000 per QALY gained, the reasons for this decision are discussed explicitly in the ‘from evidence to recommendations’ section of the relevant chapter with reference to issues regarding the plausibility of the estimate or to the factors set out in the ‘Social value judgements: principles for the development of NICE guidance’271.

When QALYs or life years gained are not used in the analysis, results are difficult to interpret unless one strategy dominates the others with respect to every relevant health outcome and cost.

4.5. Developing recommendations

Over the course of the guideline development process, the GDG was presented with:

- Evidence tables of the clinical and economic evidence reviewed from the literature. All evidence tables are in Appendix H and Appendix I.

- Summary of clinical and economic evidence and quality (as presented in chapters 6–14).

- Forest plots (Appendix J).

- A description of the methods and results of the cost-effectiveness analysis undertaken for the guideline (Appendix M, Appendix N and Appendix O).

Recommendations were drafted on the basis of the GDG's interpretation of the available evidence, taking into account the balance of benefits, harms and costs. When clinical and economic evidence was of poor quality, conflicting or absent, the GDG drafted recommendations based on their expert opinion. The considerations for making consensus-based recommendations include the balance between potential harms and benefits, economic implications compared with the benefits, current practices, recommendations made in other relevant guidelines, patient preferences and equality issues. The consensus recommendations were reached through discussions by the GDG. The GDG may also consider whether the uncertainty is sufficient to justify delaying making a recommendation to await further research, taking into account the potential harm of failing to make a clear recommendation.

The main considerations specific to each recommendation are outlined in the ‘Linking evidence to recommendations’ section of each chapter.

4.5.1. Research recommendations

When areas were identified for which good evidence was lacking, the guideline development group considered making recommendations for future research. Decisions about inclusion were based on factors such as:

- the importance to patients or the population

- national priorities

- potential impact on the NHS and future NICE guidance

- ethical and technical feasibility.

4.5.2. Validation process

The guidance is subject to a six-week public consultation and feedback as part of the quality assurance and peer review of the document. All comments received from registered stakeholders are responded to in turn and posted on the NICE website when the pre-publication check of the full guideline occurs.

4.5.3. Updating the guideline

Following publication, and in accordance with the NICE guidelines manual, NICE will ask a National Collaborating Centre or the National Clinical Guideline Centre to advise NICE’s Guidance Executive whether the evidence base has progressed sufficiently to alter the guideline recommendations and warrant an update.

4.5.4. Disclaimer

Healthcare providers need to use clinical judgement, knowledge and expertise when deciding whether it is appropriate to apply guidelines. The recommendations cited here are a guide and may not be appropriate for use in all situations. The decision to adopt any of the recommendations cited here must be made by the practitioners in light of individual patient circumstances, the wishes of the patient, clinical expertise and resources.

The National Clinical Guideline Centre disclaims any responsibility for damages arising out of the use or non-use of these guidelines and the literature used in support of these guidelines.

4.5.5. Funding

The National Clinical Guideline Centre was commissioned by the National Institute for Health and Clinical Excellence to undertake the work on this guideline.

Publication Details

Copyright

Apart from any fair dealing for the purposes of research or private study, criticism or review, as permitted under the Copyright, Designs and Patents Act, 1988, no part of this publication may be reproduced, stored or transmitted in any form or by any means, without the prior written permission of the publisher or, in the case of reprographic reproduction, in accordance with the terms of licences issued by the Copyright Licensing Agency in the UK. Enquiries concerning reproduction outside the terms stated here should be sent to the publisher at the UK address printed on this page.

The use of registered names, trademarks, etc. in this publication does not imply, even in the absence of a specific statement, that such names are exempt from the relevant laws and regulations and therefore for general use.

The rights of National Clinical Guideline Centre to be identified as Author of this work have been asserted by them in accordance with the Copyright, Designs and Patents Act, 1988.

Publisher

Royal College of Physicians (UK), London

NLM Citation

National Clinical Guideline Centre (UK). Psoriasis: Assessment and Management of Psoriasis. London: Royal College of Physicians (UK); 2012 Oct. (NICE Clinical Guidelines, No. 153.) 4, Methods.