NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

Madame Curie Bioscience Database [Internet]. Austin (TX): Landes Bioscience; 2000-2013.

“All models are wrong—but some are useful.” (G.E.P. Box)

The Basic Idea

Traditionally, the design of novel drugs has essentially been a trial-and-error process despite the tremendous efforts devoted to it by pharmaceutical and academic research groups. It is estimated that only one in 5,000 compounds investigated in preclinical discovery research ever emerges as a clinical lead, and that about one in 10 drug candidates in development ever gets through the costly process of clinical trials. For each drug, the investment may be on the order of $600 million over 15 years from its first synthesis to FDA approval. In 2000, U.S. pharmaceutical companies spent more than $22 billion on research and development, which, after inflation adjustment, represents a four-fold increase over the corresponding figure some 20 years earlier. In an attempt to counter these rapidly increasing costs associated with the discovery of new medicines, revolutionary advances in basic science and technology are reshaping the manner in which pharmaceutical research is conducted. For example, the use of DNA microarrays facilitates the identification of novel disease genes and also opens up other interesting opportunities in disease diagnosis, pharmacogenomics and toxicological research (toxicogenomics). The development of combinatorial chemistry and parallel synthesis methods has increased both the quantity and chemical diversity of potential leads against new targets. Our ability to discover useful leads has been greatly enhanced through astonishing advances in high-throughput screening (HTS) technologies. Through miniaturization and robotics, we now have the capacity to screen millions of compounds against therapeutic targets in a very short period of time. Central to this new drug discovery paradigm is the rapid explosion of computational techniques that allow us to analyze vast amounts of data, prioritize HTS hits and guide lead optimization. The advances and applications of computational methods in drug design are beginning to have a significant impact on the prosperity of the pharmaceutical industry.

Modern approaches to computer-aided molecular design fall into two general categories. The first includes structure-based methods, which utilize the three-dimensional structure of the ligand-bound receptor. Many innovative algorithms have been developed and implemented to construct de novo ligands that fit the receptor binding site in a complementary manner; some of these will be discussed in Chapter 5. The second approach includes ligand-based methods, in which the physicochemical or structural properties of ligand molecules are characterized. A classic example of this concept is a quantitative structure-activity relationship (QSAR) model, which provides a theoretical basis for lead optimization.

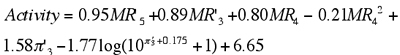

For the past four decades the development of QSAR has had a momentous impact upon medicinal chemistry. Hansch pioneered the field by demonstrating that the biological activities of drug molecules can be correlated by a function of their physicochemical parameters:

$$\text{biological activity} = f(x_1, x_2, \ldots, x_n) \qquad (1)$$

where $f$ is a mathematical function and the $x_i$ are the molecular descriptors providing information about the physicochemical or structural attributes of the molecules. The major challenges for QSAR practitioners are to find an appropriate set of molecular descriptors and a suitable function that can accurately account for the experimental data.

Development of QSAR Models

Ever since the seminal work of Hansch almost 40 years ago, QSAR research has evolved from the use of simple regression models with a few electronic or thermodynamic variables to an important discipline that is being applied to a wide range of problems.1–4 In the following sections, we will outline the typical steps in the development of a QSAR model.

Descriptor Generation

The first step is the tabulation of experimental or computational physicochemical parameters which provide a description of similarities and differences of the compounds under investigation. The computation of descriptor values is generally straightforward because many commercial and academic computer-aided molecular design (CAMD) packages have been developed to handle this kind of calculation, often with great ease. However, it is more difficult to know a priori the type of descriptor which might be relevant to the biological activity of interest. In many cases, a standard set of descriptors chosen from experience may be used.5

Dimensionality of QSAR Descriptors

Molecular descriptors can vary greatly in their complexity. A simple example may be a structural key descriptor, which takes the form of a binary indicator variable that encodes the presence of certain substructures or functional features. Other descriptors, such as HOMO and LUMO energies, require semi-empirical or quantum mechanical calculations and are therefore more time-consuming to compute. Molecular descriptors are often categorized according to their dimensionality, which refers to the structural representation from which the descriptor values are derived.6 The 1D-descriptors are generally constitutive descriptors (e.g., molecular weight). The 2D-descriptors include structural fragment fingerprints or molecular connectivity indices. It has been argued that structure key descriptors such as UNITY7 and Daylight8 implicitly account for many physicochemical parameters as well as atom-types and connectivity information.6 The molecular connectivity indices, which are based on graph theory concepts, can differentiate molecules according to their size, degree of branching, shape, and flexibility. Some of the most well-known topological descriptors are the Wiener index (W), the Zagreb index, the Hosoya index (Z), the Kier and Hall molecular connectivity index (χ), Kier's shape index (κ), the molecular flexibility index (Φ), and the Balaban indices (Jx and Jy). As implied by the name, 3D-descriptors are generated from a three-dimensional representation of molecules. Some examples include molecular volume, solvent-accessible surface area, molecular interaction fields, or spatial pharmacophores. With very few exceptions9–11 the descriptor values are computed from a static conformation, which is either a standard conformation with ideal geometries generated from programs such as CORINA12 or CONCORD,13 or a conformation that is fitted against a target X-ray structure or a pharmacophore.
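
As an illustration of a graph-theoretical 2D descriptor, the Wiener index can be computed as the sum of shortest-path bond counts between all pairs of heavy atoms in the hydrogen-suppressed molecular graph. The sketch below is a minimal example, assuming that molecules are supplied as hand-written adjacency lists; the function name `wiener_index` and the two example graphs are illustrative and are not taken from any particular CAMD package.

```python
from collections import deque


def wiener_index(adjacency):
    """Sum of shortest-path lengths over all unordered pairs of atoms.

    `adjacency` maps each heavy-atom index to the list of its bonded
    neighbours (hydrogen-suppressed graph, all bonds counted as length 1).
    """
    total = 0
    for source in adjacency:
        # Breadth-first search gives topological distances from `source`.
        dist = {source: 0}
        queue = deque([source])
        while queue:
            atom = queue.popleft()
            for neighbour in adjacency[atom]:
                if neighbour not in dist:
                    dist[neighbour] = dist[atom] + 1
                    queue.append(neighbour)
        total += sum(dist.values())
    return total // 2  # every pair was visited from both ends


# Carbon skeletons of two C4H10 isomers (illustrative input).
n_butane = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
isobutane = {0: [1], 1: [0, 2, 3], 2: [1], 3: [1]}

print(wiener_index(n_butane), wiener_index(isobutane))
```

The linear isomer yields the larger value, which reflects how this index separates molecules of equal size by their degree of branching.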

Fragment-Based Physicochemical Descriptors

In addition to intrinsic dimensionality, molecular descriptors can be classified according to their physicochemical attributes. It is recognized that the dominant factors in receptor-drug binding are based on steric, electrostatic, and hydrophobic interactions. For many years medicinal chemists have attempted to model these principal forces of molecular recognition by using empirical physicochemical parameters, which ultimately led to the introduction of fragment constants in early QSAR studies. These descriptors are constants that account for the effect, within a congeneric series of molecules, of different substituents attached to the common core. The best-known electronic fragment constants are the Hammett σm and σp constants, denoting the electronic effect of substituents at the meta and para positions (Note: the σ constant for ortho substituents is generally unreliable because of its steric interaction with the adjacent core group). Another common pair of electronic parameters is F and R, which are the inductive and the resonance components of the σp parameter, respectively. Perhaps the most widely used fragment constant in the field of QSAR is the hydrophobicity parameter, π. It measures the difference in hydrophobic character of a given substituent relative to hydrogen. To represent the size of a substituent, molar refractivity (MR) is often used, though it has been shown that MR is not a pure bulk parameter since it also captures the molecular polarizability of substituents.3

Novel QSAR Descriptors

Novel descriptors continue to appear in the literature; the currently fashionable types encode combinations of steric, hydrophobic and electrostatic properties, not only for molecular fragments but for the whole molecule as well. For example, polar surface area contains information about both the electronics and the size of a molecule and is commonly used in intestinal absorption modeling (see Chapter 4).9 Electrotopological state (E-state) indices capture both molecular connectivity and the electronic character of a molecule.14,15 The GRID16 and CoMFA17 programs take advantage of molecular interaction fields by using different probe types (steric, electrostatic or lipophilic) in a 3D lattice environment. Other variants of molecular field type, such as the molecular similarity-based CoMSIA,18 have also been reported in the literature. Most of the 3D-descriptors require a pre-aligned set of molecules. In cases where the exact molecular alignment is not obvious, one may consider the use of spatially invariant 3D descriptors (i.e., descriptors whose values depend on conformation but not on spatial orientation). A few innovative descriptors have been introduced for this purpose, including autocorrelation vectors of molecular surface properties, molecular surface-weighted holistic invariant molecular (MS-WHIM) descriptors,19–22 and molecular quadrupolar moments.23 Another interesting descriptor, EVA,24,25 is based on normal coordinate frequency analysis and has recently been validated on a number of standard QSAR benchmark data sets.26–28 Burden's eigenvalue index,29 originally developed for chemical indexing and later extended to become the BCUT metric30 for molecular diversity analysis, has also been found useful as a QSAR descriptor in emerging applications.31,32

Amino Acid Descriptors

Peptides are often used as probes of a binding site due to ease of synthesis and also to the prevalence of endogenous peptide ligands in nature. Consequently, significant effort has been expended on the development of robust parameters specifically designed to represent amino acids in QSAR applications. Examples are principal properties (z-scales), which are derived from a principal component analysis of 29 experimental and theoretical physicochemical parameters for the 20 naturally occurring amino acids (see Chapter 5),33–36 and the isotropic surface area (ISA) and electronic charge index.37 These latter indices are derived from 3D conformers of the side-chain units and are therefore more readily parameterized for unnatural amino acids. Both sets of parameters have been found to be useful for exploring peptide structure-activity relationships.

Feature Selection

Having generated a set of molecular descriptors, the next step is to apply a statistical or pattern recognition method to correlate these descriptors with the observed biological activities (see also the introduction to feature extraction given in Chapter 2). Partly due to the ease with which a great variety of theoretical descriptors may be generated, QSAR researchers are often confronted with high-dimensional data sets; i.e., the task in such a situation is to solve an underdetermined problem for which there are more variables (descriptors) than objects (compounds). The situation is even more complicated than it appears, because the underlying physicochemical attributes of the molecules that are correlated with their biological activities are often unknown, so that a priori feature selection is not feasible in most cases. Thus, the selection of the best variables for a QSAR model can be very challenging. To reduce the risk of chance correlation and overfitting of data, the entire data set is usually preprocessed using a filter to remove descriptors with either small variance or no unique information.38,39 A feature selection routine then operates on the reduced data set and identifies which of the descriptors have the most prominent influence on the activity to build a model. There are two major advantages of feature selection. First, it can help to define a model that can be interpreted. Second, the reduced model is often more predictive, partly because of the better signal-to-noise ratio which is a consequence of pruning the non-informative inputs.
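
The preprocessing filter described above can be sketched as follows. This is a minimal illustration rather than the procedure of refs. 38 and 39: the function name `prefilter`, the thresholds `min_variance` and `max_abs_r`, and the toy descriptor values are all assumptions, and "no unique information" is approximated here by a high pairwise Pearson correlation with a descriptor that has already been kept.

```python
from math import sqrt
from statistics import mean, pvariance


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def prefilter(descriptors, min_variance=1e-6, max_abs_r=0.95):
    """Drop near-constant descriptors and descriptors that nearly duplicate
    one that was kept earlier (hypothetical thresholds)."""
    kept = {}
    for name, values in descriptors.items():
        if pvariance(values) < min_variance:
            continue  # carries (almost) no variance across the compounds
        if any(abs(pearson(values, other)) > max_abs_r
               for other in kept.values()):
            continue  # redundant with a descriptor already retained
        kept[name] = values
    return kept


# Toy descriptor table for four compounds (illustrative values only).
toy = {
    "mw": [100.0, 150.0, 200.0, 250.0],
    "const": [1.0, 1.0, 1.0, 1.0],        # zero variance
    "mw_scaled": [1.0, 1.5, 2.0, 2.5],    # perfectly correlated with mw
    "logp": [1.2, 0.3, 2.5, 0.9],
}
print(list(prefilter(toy)))
```

Because descriptors are examined in order, which member of a correlated pair survives depends on the ordering of the input table; a production filter would need an explicit rule for that choice.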

In the past, variable or feature selection was made by a human expert who relied on experience and scientific intuition, or by a correlation analysis of the data set, or by application of statistical methods such as forward selection or backward elimination. However, when the dimensionality of the data is high and the interrelations between variables are convoluted, human judgment can be unreliable. Also, a simple forward or backward stepping algorithm fails to take into account information that involves the combined effect of several features, so that the optimal solution is not necessarily obtained. This problem has been summarized by Kubinyi: “Selection of variables is time-consuming, difficult and, despite many different statistical criteria for the evaluation of the resulting models, a highly subjective and ambiguous procedure”.40 This suggests the need for a method which is applicable to complex multivariate data, easy to use and, of course, supplies a good solution to the problem.

Recent developments in computer science have allowed the creation of intelligent algorithms capable of finding the best, or nearly the best, solutions for such a combinatorial optimization problem (“complex adaptive systems”). A number of fully automated, objective feature selection methods have been introduced. In this section, we will review some of the most common selection strategies used in the current QSAR applications.

Forward Stepping and Backward Elimination

One of the simplest feature selection methods is a stepwise procedure. In forward stepping selection, new descriptors are added to the model one at a time until no more significant variables can be found. Most often, statistical significance is judged by the F-statistic. In backward elimination, the model begins with a full set of descriptors and less informative descriptors are pruned systematically. Both techniques are available as standard routines in many statistical or molecular modeling packages and are very fast to execute. The major shortcoming of this stepwise approach is that the algorithm fails to take into account information that involves any coupled (correlated, parallel) effect among multiple descriptors. Specifically, it is possible that a descriptor is eliminated in an earlier round because it appears to be redundant at that stage, although it could later become the most relevant descriptor once others have been eliminated. This method is very sensitive to multiple local minima and often finds a non-optimal solution, a problem which is related to the coupled descriptor effect just described.
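
A forward stepping procedure of this kind can be sketched in a few lines. The example below is a minimal illustration under stated assumptions: the model is an ordinary least-squares fit with intercept, significance is judged by a partial F-statistic against a fixed, hypothetical entry threshold `f_to_enter`, and the function names and the toy data are not taken from any statistical package.

```python
def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def rss(columns, y):
    """Residual sum of squares of a least-squares fit with intercept."""
    rows = [[1.0] + [col[i] for col in columns] for i in range(len(y))]
    p = len(rows[0])
    xtx = [[sum(r[j] * r[k] for r in rows) for k in range(p)] for j in range(p)]
    xty = [sum(r[j] * yi for r, yi in zip(rows, y)) for j in range(p)]
    beta = solve(xtx, xty)
    return sum((yi - sum(b * v for b, v in zip(beta, r))) ** 2
               for r, yi in zip(rows, y))


def forward_select(descriptors, y, f_to_enter=4.0):
    """Add one descriptor at a time while the best partial F is large enough."""
    selected = []
    current_rss = rss([], y)
    while True:
        best = None
        for name in descriptors:
            if name in selected:
                continue
            trial = selected + [name]
            dof = len(y) - len(trial) - 1  # residual degrees of freedom
            if dof <= 0:
                continue
            new_rss = rss([descriptors[d] for d in trial], y)
            f = ((current_rss - new_rss) / (new_rss / dof)
                 if new_rss > 0 else float("inf"))
            if best is None or f > best[0]:
                best = (f, name, new_rss)
        if best is None or best[0] < f_to_enter:
            return selected
        selected.append(best[1])
        current_rss = best[2]


# Toy data: activity depends on x1 only; x2 is unrelated (illustrative).
toy = {
    "x1": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0],
    "x2": [3.0, 1.0, 4.0, 1.0, 5.0, 9.0, 2.0, 6.0],
}
activity = [2.1, 3.8, 6.15, 7.95, 10.2, 11.9, 13.85, 16.05]
print(forward_select(toy, activity))
```

Each round only asks which single descriptor helps most given those already chosen, which is exactly why coupled effects among several descriptors can be missed.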

Neural Network Pruning

The vast majority of ANN applications in QSAR concern the mapping of descriptor values to biological activities (i.e., parameter estimation of a model). Wikel and Dow pioneered the (unconventional) use of ANN in variable selection for QSAR studies.41 Their approach is considered a “magnitude-based” method, in which the sensitivities of all input variables are computed and the less sensitive variables (as reflected by the smaller magnitude of their associated weights) are pruned. Other researchers have proposed “error-based” pruning methods, in which the sensitivity of each input is probed by the corresponding change in the training error when its associated weights are eliminated.42

Simulated Annealing

Simulated annealing (SA) is a popular optimization method that is modeled from a physical annealing process.43 The principle of this method is similar to Monte Carlo simulations, except for the use of a cooling schedule for the temperature parameter. As the Boltzmann-type probability factor changes with the lowering of the temperature over the course of the simulation, solutions of lower quality are more readily accepted at the beginning of the optimization than during later stages. The result of SA often depends on the particular cooling schedule used during optimization. The most commonly used schedule is a geometric cooling scheme, where the temperature is decreased by a constant factor (typically in the range 0.90 to 0.99) at each annealing step. Other advanced cooling schedules have been proposed that help enhance the exploration of configuration space. They include the methods of geometric re-heating and adaptive cooling. The major advantages of SA are that the algorithm is easily understood, straightforward to implement, very robust, and generally applicable to almost all types of combinatorial optimization problems. In most cases, one can find good quality solutions to the problem at hand. However, as for all stochastic optimization methods, simulations that are initialized with different random seeds or cooling schedules can lead to very different outcomes. Compared to the forward stepping/backward elimination procedure, this algorithm requires substantially more computing resources.
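
The following sketch shows how SA with a geometric cooling schedule might be applied to descriptor subset selection. It is an illustration under stated assumptions rather than a reference implementation: the move set (flipping one descriptor in or out), the parameter names, and in particular the scoring function `toy_score`, which stands in for a real model-quality measure such as a cross-validated statistic, are all hypothetical.

```python
import math
import random


def anneal_subset(n_descriptors, score, t_start=1.0, cooling=0.95,
                  n_steps=500, seed=0):
    """Maximize `score` over descriptor subsets by simulated annealing."""
    rng = random.Random(seed)
    current = {i for i in range(n_descriptors) if rng.random() < 0.5}
    current_score = score(current)
    best, best_score = set(current), current_score
    temperature = t_start
    for _ in range(n_steps):
        # Move: flip the membership of one randomly chosen descriptor.
        candidate = current ^ {rng.randrange(n_descriptors)}
        candidate_score = score(candidate)
        delta = candidate_score - current_score
        # Metropolis criterion: improvements are always accepted; a worse
        # subset is accepted with Boltzmann-type probability exp(delta / T).
        if delta >= 0 or rng.random() < math.exp(delta / temperature):
            current, current_score = candidate, candidate_score
            if current_score > best_score:
                best, best_score = set(current), current_score
        temperature *= cooling  # geometric cooling by a constant factor
    return best, best_score


# Hypothetical objective: descriptors 1, 4 and 7 are informative and every
# additional descriptor costs half a point.
INFORMATIVE = {1, 4, 7}


def toy_score(subset):
    return len(subset & INFORMATIVE) - 0.5 * len(subset - INFORMATIVE)


best_subset, best_value = anneal_subset(10, toy_score)
print(sorted(best_subset), best_value)
```

Early in the run the high temperature lets poorer subsets through, whereas after a few hundred steps the search is effectively greedy; changing `seed` or `cooling` is a simple way to probe the sensitivity to random seeds and cooling schedules mentioned above.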

Genetic Algorithm

The genetic algorithm (GA) idea mimics Nature to solve complex combinatorial optimization problems. GA is a stochastic optimization method that simulates evolution using a simplistic model of molecular genetics.44 The key aspect of a GA is that it investigates many solutions simultaneously, each of which explores different regions of configuration space. The details of this algorithm have been discussed in Chapter 1 and are not repeated here.

Tabu Search

Tabu search is an iterative procedure for solving combinatorial optimization problems. Compared to either SA or GA, the use of Tabu search related to computational molecular design in the literature has been relatively sparse. The basic concept of Tabu search is to explore the search space of all feasible solutions by a sequence of intelligent moves through incorporation of adaptive memory.45 To ensure that new regions of parameter space will be investigated, new moves in Tabu search are not chosen randomly. Instead, some moves that have been previously visited are classified as forbidden (hence Tabu or Taboo), or are severely penalized to avoid cyclic embarking or becoming trapped in a local minimum. Central to this paradigm is Tabu list management that concerns the maintenance and update of moves that are considered forbidden within a particular iteration of moves. In the context of descriptor selection, the Tabu memory may contain a list of individual descriptors or combinations thereof, which have been shown to be uninteresting during the previous rounds of model building.46
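
A minimal sketch of the idea is given below. It assumes the simplest form of adaptive memory, a fixed-length list of the most recently flipped descriptors, which is only one of several possible Tabu list designs and is not the scheme of ref. 46; the function name, the `tenure` parameter and the toy objective are hypothetical.

```python
from collections import deque


def tabu_search(n_descriptors, score, n_iterations=20, tenure=3):
    """Deterministic neighbourhood search with a recency-based Tabu list."""
    current = set()
    best, best_score = set(current), score(current)
    tabu = deque(maxlen=tenure)  # descriptors flipped most recently
    for _ in range(n_iterations):
        # Admissible moves: flip any descriptor that is not currently Tabu.
        moves = [i for i in range(n_descriptors) if i not in tabu]
        # Take the best admissible move even if it lowers the score; this
        # is what lets the search walk out of a local optimum.
        flip = max(moves, key=lambda i: score(current ^ {i}))
        current = current ^ {flip}
        tabu.append(flip)  # the oldest entry drops off automatically
        if score(current) > best_score:
            best, best_score = set(current), score(current)
    return best, best_score


# Hypothetical objective: descriptors 1, 4 and 7 are informative and every
# additional descriptor costs half a point.
INFORMATIVE = {1, 4, 7}


def toy_score(subset):
    return len(subset & INFORMATIVE) - 0.5 * len(subset - INFORMATIVE)


print(tabu_search(10, toy_score))
```

Unlike SA or a GA, nothing here is random: the trajectory is fully determined by the objective, the neighbourhood definition and the length of the Tabu list.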

Exhaustive Enumeration

Although GAs or Tabu searching explore many possible solutions simultaneously, there is no guarantee that the best solution will ever emerge from simulations. In the presence of complex non-linear system behavior, the exhaustive enumeration of all possible sets of descriptors is the only method which ensures the discovery of the globally optimal solution, although such a brute-force approach is often practically impossible. This is due to an exponential increase of the number of descriptor combinations that can be formed from a given number of descriptors (Note: the number of all possible non-empty subsets is $2^N - 1$, where $N$ is the number of descriptors considered). For a data set that contains 50 descriptors, the number of possibilities is already greater than $10^{15}$.
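
The growth in the number of candidate subsets is easy to verify directly. The short sketch below enumerates all non-empty subsets for a small, made-up list of descriptor names and then checks the count quoted for 50 descriptors; the helper name `all_subsets` is illustrative.

```python
from itertools import combinations


def all_subsets(descriptors):
    """Yield every non-empty subset of the given descriptor names."""
    for size in range(1, len(descriptors) + 1):
        yield from combinations(descriptors, size)


names = ["logP", "MR", "sigma", "PSA"]  # illustrative descriptor names
subsets = list(all_subsets(names))

# Explicit enumeration agrees with the closed-form count 2**N - 1.
print(len(subsets), 2 ** len(names) - 1)

# For N = 50 the count can only be computed, not enumerated in practice.
print(2 ** 50 - 1, 2 ** 50 - 1 > 10 ** 15)
```

In a real exhaustive search each of these subsets would require a full model fit and validation, which is what makes the approach impractical beyond a few dozen descriptors.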

Other Feature Selection Methods

Many feature selection methods have appeared in the literature and it is beyond the scope of this text to provide a comprehensive review. Here other interesting approaches are discussed briefly. GOLPE (Generating Optimal Linear PLS Estimations) is a variable selection procedure to obtain the best predictive PLS (vide infra) models. In this approach, combinations of variables derived from a fractional factorial design strategy are used to run several PLS analyses, and the variables that contribute significantly to prediction accuracy are selected, while all others are discarded.47,48 Livingstone and coworkers recently developed a novel method called Unsupervised Forward Selection (UFS) for eliminating redundant variables.39 UFS begins with the two least correlated descriptors in the data set and iteratively adds more descriptors based on an orthogonality criterion. Variables whose squared multiple correlation coefficients with those already chosen exceed a user-defined threshold are rejected. The resulting selection of descriptors has low redundancy and low multicollinearity.

Model Construction

Having selected relevant features, the final stage of QSAR model building is executed by a feature mapping procedure—also referred to as the parameter estimation problem. The goal is to formulate a mathematical relationship and to estimate the model parameters. The quality of the parameter set is usually judged by comparing the result of the fitted data to observed data.49 Quite often, feature selection and parameter estimation are performed simultaneously to produce a QSAR model.

Linear Methods

Multiple linear regression (MLR), or ordinary least squares (OLS), was the traditional method for QSAR applications in the past. The major advantage of this method is its computational simplicity, which makes the resulting equation easy to interpret. However, this method becomes inapplicable as soon as the number of input variables equals or exceeds the number of observed objects. As a rule of thumb, the ratio of objects to variables should be at least five for MLR analysis; otherwise there is a correspondingly large risk of chance correlation.50 A common way to reduce the number of inputs to MLR without explicit feature selection is through feature extraction by means of principal component regression (PCR). In this procedure, the complete set of input descriptors is transformed to its orthogonal principal components, relatively few of which may suffice to capture the essential variance of the original data. The principal components are then used as the input to a regression analysis.

Another very powerful multivariate statistical method for application to an underdetermined data set is partial least squares (PLS).51 Briefly, PLS attempts to identify a few latent structures, or linear combinations of descriptors, that best correlate with the observables. Cross-validation is employed to avoid overfitting of data. Unlike MLR, there is no restriction in PLS on the ratio between data objects and variables, and the PLS method can analyze several response variables simultaneously. In addition, PLS can deal with strongly collinear input data and tolerates some missing data values.

Non-Linear Methods

Traditionally, non-linear correlations in the data are dealt with explicitly by a predetermined functional transformation before entering an MLR. Unfortunately, the introduction of non-linear or cross-product terms in a regression equation often requires knowledge which is not available a priori. Moreover, it adds to the complexity of the problem and often leads to insignificant improvement in the resulting QSAR. To overcome this deficiency of linear regression, there is an increasing interest in techniques that are intrinsically non-linear. Some of them are mapping methods that attempt to preserve the original Euclidean distance matrix when high-dimensional data are projected to lower (typically two or three) dimensions. Examples are non-linear mapping (NLM), the self-organizing map (SOM),52 or a ReNDeR-type neural network53 (see discussion in Chapter 2). However, although such maps do offer visual cues to structure-activity relationships, they rarely provide a quantitative relationship between structural descriptors and activity. At the present time, artificial neural networks (ANN) are probably the most widely used non-linear methods in chemometric and QSAR applications (see Chapter 1). ANN are computer-based simulations which contain some elements that exist in living nervous systems. What makes these networks powerful is their potential for performance improvement over time as they acquire knowledge about a problem, and their ability to handle fuzzy real-world data. With the presence of hidden layers, neural networks are able to implicitly perform a non-linear mapping of the physicochemical parameters to the biological activities of the compounds. During the training phase, a network builds an internal feature model from data patterns presented to its input. New, similar patterns will then be recognized; the network has the ability to generalize and, more importantly, it is able to make quantitative predictions for queries of a similar nature.

Another emerging non-linear method is genetic programming (GP), whose initial use was to evolve computer programs.54 Recently, Kell and coworkers have published an exciting adaptation of GP to analyze mass spectral data.55 It is worth pointing out that in GP, the evolutionary algorithm is responsible not only for the selection of descriptors, but also for parameter estimation; i.e., the discovery of the appropriate mathematical transformation relating the descriptors and the response function. The functional tree implementation suggested by Kell operates on simple mathematical expressions that are readily manipulated by genetic operators. Ultimately, these simple mathematical functions are combined, leading to a non-linear multivariate regression. An advantage of GP over ANN is that the evolved trees are more interpretable and therefore provide valuable insights into the logic of the decision-making process.56 Another notable difference between GP and conventional ANN is that the GP tree can be evolved to arbitrary complexity in order to solve a problem, although the use of evolutionary neural networks does allow for adaptation of the neural network architecture (such as the number of hidden nodes) during training.57 In both cases, the QSAR practitioner should be aware of the risk of data overfitting.

Recently, Tropsha and coworkers published a novel non-linear QSAR approach adapted from the k-nearest-neighbor principle (kNN-QSAR).58,59 Briefly, the activity of an unknown compound is predicted as the average activity of the k most similar compounds as measured by their Euclidean distances in multidimensional descriptor space. A simulated annealing procedure may be applied to the selection of an optimal set of descriptors based on predictive statistical parameters. This method is extremely fast and is also generally applicable to large data sets that may contain many structurally diverse compounds.
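
The prediction step of such a nearest-neighbor model is simple enough to show in full. The sketch below covers only that step, assuming an unweighted average over the k nearest training compounds; it omits the simulated annealing descriptor selection, and the function name and the toy data are illustrative rather than taken from the published kNN-QSAR implementation.

```python
import math


def knn_predict(query, train_x, train_y, k=3):
    """Average activity of the k training compounds closest to `query`
    (Euclidean distance in descriptor space)."""
    by_distance = sorted(
        (math.dist(query, x), y) for x, y in zip(train_x, train_y)
    )
    nearest = by_distance[:k]
    return sum(y for _, y in nearest) / len(nearest)


# Toy training set: two descriptors per compound and a measured activity.
train_x = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (5.0, 5.0), (6.0, 5.0)]
train_y = [5.0, 5.5, 6.0, 8.0, 8.5]

print(knn_predict((0.2, 0.1), train_x, train_y, k=3))
```

Because distances are computed on raw descriptor values, descriptors with large numerical ranges dominate unless the columns are scaled beforehand, a preprocessing choice that this sketch leaves to the user.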

Model Validation

Model validation is a critical but often neglected component of QSAR development. In a recent review,60 Kövesdi and coworkers state that “[..] In many respects, a proper validation process is more important than a proper training. It is all too easy to get a very small error on the training set, due to the enormous fitting ability of the neural network, and then one may erroneously conclude the network would perform excellently”. The first benchmark of a QSAR model is usually to determine the accuracy of the fit to the training data (“re-classification”), most commonly reported by residual root-mean-squares (rms) error or the Pearson correlation coefficient (see the next Section for definitions). However, because QSAR models are often used for activity prediction of compounds not yet synthesized, the more important statistical measures are those giving an indication of their prediction accuracy.

The most popular procedure for estimation of the prediction accuracy is cross-validation, which includes techniques such as jack-knife, leave-one-out (LOO), leave-group-out (LGO) and bootstrap analysis. The first group of methods is based on data splitting, where the original data set is randomly divided into two subsets. The first is a set of training compounds used for exploration and model building, and the second is the so-called “validation set” for prediction and model validation. The leave-one-out procedure systematically removes one data point at a time from the training set, and on the basis of this reduced data set constructs a model that is subsequently used to predict the removed sample. This procedure is repeated for all data points, so that a complete set of predicted values can be obtained. It has been argued that the LOO procedure tends to overestimate the model “predictivity” and that the resulting QSAR models are “over-optimistic”.61 It is worth noting that LOO cross-validation is often confused with jack-knifing. Technically, jack-knifing is used to estimate the bias of a statistic. A typical application of jack-knifing is to compute the statistical parameters of interest for each subset of data, and to compare the average of these subset statistics with the one that is obtained from the entire sample in order to estimate the bias of the latter. In LOO, the main focus is on the estimation of the generalization error based on the prediction of the left-out samples.62 As an alternative to LOO, an LGO procedure can be applied, which typically sets aside between 5% and 20% of the entire data set as a validation subset. In the literature, this procedure is also known as “k-fold cross-validation”, indicating that the entire data set is divided into k groups of approximately equal size. An added bonus of an LGO procedure is a vast reduction in computing resources relative to a standard LOO cross-validation.
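
The data-splitting logic shared by LOO and LGO can be sketched as a single routine in which LOO is simply the case where k equals the number of compounds. The example below is a minimal illustration: the model is a one-descriptor least-squares line chosen purely to keep the code short, and the function names, the `seed` parameter and the toy data are assumptions.

```python
import random


def fit_line(x, y):
    """Least-squares line through (x, y); returns a predictor function."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return lambda value: my + slope * (value - mx)


def cross_val_predict(x, y, k, seed=0):
    """Predict every compound from a model that never saw it.

    The compounds are shuffled and dealt into k groups of nearly equal
    size; k = len(x) reproduces leave-one-out.
    """
    indices = list(range(len(x)))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    predictions = [None] * len(x)
    for held_out in folds:
        train = [i for i in indices if i not in held_out]
        model = fit_line([x[i] for i in train], [y[i] for i in train])
        for i in held_out:
            predictions[i] = model(x[i])
    return predictions


descriptor = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
activity = [3.0, 5.0, 7.0, 9.0, 11.0, 13.0]  # exactly 2 * x + 1

loo = cross_val_predict(descriptor, activity, k=len(descriptor))
lgo = cross_val_predict(descriptor, activity, k=3)
print(loo)
print(lgo)
```

LOO refits the model once per compound, whereas the 3-fold run refits it only three times, which is the saving in computing resources referred to above.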

Bootstrapping represents another type of re-sampling method that is distinct from data-splitting. It is a statistical simulation method which generates sample distributions from the original data set.63 The concept of bootstrapping is founded on the premise that the sample represents an estimate of the entire population, and that statistical inference can be drawn from a large number of pseudo-samples to estimate the bias, standard error, and confidence intervals of the parameter of interest. The pseudo- (or bootstrap-) samples are created from the original data set by sampling with replacement, where some objects may appear in multiple instances. The usual point of contention about the bootstrap procedure concerns the minimal number of samplings required for computing reliable statistics. An empirical rule given by Davison and Hinkley suggests that the number of bootstrap-samples should be at least 40 times the number of sample objects.64
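
A bootstrap estimate of bias and standard error can be sketched as follows. The example assumes that the statistic of interest is the mean activity of a small, made-up data set, and it takes the number of pseudo-samples from the empirical rule quoted above (40 times the number of objects); the function name and the `seed` parameter are illustrative.

```python
import random
from statistics import mean, stdev


def bootstrap(data, statistic, n_resamples, seed=0):
    """Evaluate `statistic` on pseudo-samples drawn with replacement."""
    rng = random.Random(seed)
    n = len(data)
    estimates = []
    for _ in range(n_resamples):
        # Sampling with replacement: an object may appear several times.
        sample = [data[rng.randrange(n)] for _ in range(n)]
        estimates.append(statistic(sample))
    return estimates


activities = [5.1, 5.8, 6.3, 6.9, 7.4, 7.7, 8.2, 8.8]  # illustrative values
estimates = bootstrap(activities, mean, n_resamples=40 * len(activities))

bias = mean(estimates) - mean(activities)  # bootstrap estimate of bias
std_error = stdev(estimates)               # bootstrap standard error
print(len(estimates), bias, std_error)
```

The same routine works for any statistic that can be computed from a resampled data set, for example a correlation coefficient, provided that whole compounds (descriptor values together with their activity) are resampled as units.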

Another popular means of statistical validation is through a randomization test. In this procedure, the output values (typically biological responses) of the compounds are shuffled randomly, and the scrambled data set is re-examined by the QSAR method against real (unscrambled) input descriptors to determine the correlation and predictivity of the resulting “model”. The entire procedure is repeated multiple times (typically 20–50 models) on many differently scrambled data sets. If there remains a strong correlation between the selected descriptors and the randomized response variables, then the significance of the proposed QSAR model is regarded as suspect.
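
The randomization test can be sketched with a deliberately simple "model". In the example below the QSAR method is reduced to a single-descriptor correlation, so that the squared correlation coefficient plays the role of the model statistic; the function names, the number of trials and the toy data are assumptions.

```python
import random


def r_squared(x, y):
    """Squared Pearson correlation between a descriptor and the response."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy * sxy / (sxx * syy)


def y_randomization(x, y, n_trials=50, seed=0):
    """Return the statistic for the real data and for scrambled responses."""
    rng = random.Random(seed)
    real = r_squared(x, y)
    scrambled = []
    for _ in range(n_trials):
        shuffled = list(y)
        rng.shuffle(shuffled)  # break the descriptor-activity pairing
        scrambled.append(r_squared(x, shuffled))
    return real, scrambled


descriptor = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]
activity = [2.2, 3.9, 6.1, 8.0, 9.8, 12.1, 14.2, 15.9, 18.1, 19.9]

real, scrambled = y_randomization(descriptor, activity)
# Fraction of scrambled data sets that match or beat the real statistic.
fraction = sum(s >= real for s in scrambled) / len(scrambled)
print(real, max(scrambled), fraction)
```

In a full application the entire modeling procedure, including any descriptor selection, would be rerun on each scrambled data set, since it is the selection step that most easily produces chance correlations.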

Finally, the most stringent validation of a QSAR model is through the prediction of new test compounds. It is important that the compounds in an external test set are not used in any manner during the model building process (e.g., for optimizing network parameters or determining a stopping point for neural network training). Otherwise the introduction of bias from the test set compromises the validation process.

Model Quality

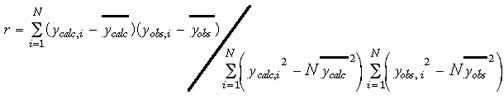

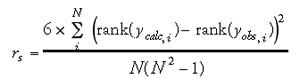

A variety of statistical parameters have been reported in the QSAR literature to reflect the quality of the model. These measures give indications as to how well the model fits existing data, i.e., they measure the explained variance of the target parameter y in the biological data. Some of the most common measures are listed below. The first is the Pearson product-moment correlation coefficient (r), which measures the linearity between two variables (Eq. 2). If two variables are perfectly linear with positive slope, then r = 1. However, the Pearson correlation coefficient can be highly influenced by outliers or a skewed data distribution. Under such circumstances, the Spearman rank correlation coefficient rS (Eq. 3) is a robust alternative to r when normality is unreasonable or outliers are present. The Spearman rank correlation coefficient is calculated from the ranks of scores, not the scores themselves. In other words, it is a measure of the strength of the linear relationship between population ranks, and is therefore less sensitive to the presence of outliers in the data. Furthermore, Spearman rank correlation measures the monotonicity of the relationship between two random variables; if two variables are perfectly monotonically increasing, then rS = 1. It is noteworthy that if rS is noticeably greater than r, a transformation of the data might lead to a stronger linear relationship. For classification, Matthews' correlation coefficient (c) is a popular statistical parameter to denote the quality of fit (Eq. 18 of Chapter 2). This accounts not only for correct predictions, i.e., true positives (P) and true negatives (N), but also for incorrect predictions (false positives/overpredictions (O) and false negatives/underpredictions (U)). Similar to the other types of correlation coefficients, the Matthews' correlation coefficient ranges from 1 (perfect correlation) to −1 (perfect anti-correlation).
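
The three correlation measures can be sketched in code as follows. This is a minimal illustration, not a transcription of Eqs. 2 and 3: Spearman's coefficient is computed here as the Pearson correlation of average ranks, which agrees with the usual rank-difference formula when there are no ties, and the Matthews coefficient uses the P, N, O, U counts defined above. All function names and the example data are assumptions.

```python
from math import sqrt


def pearson(x, y):
    """Pearson product-moment correlation coefficient r."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average = (i + j) / 2 + 1
        for position in range(i, j + 1):
            result[order[position]] = average
        i = j + 1
    return result


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def matthews(p, n, o, u):
    """Matthews coefficient from true positives (p), true negatives (n),
    overpredictions (o) and underpredictions (u)."""
    denominator = sqrt((n + u) * (n + o) * (p + u) * (p + o))
    return (p * n - o * u) / denominator if denominator else 0.0


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [1.0, 8.0, 27.0, 64.0, 125.0]  # monotonic but non-linear (y = x**3)

print(pearson(x, y), spearman(x, y))
print(matthews(50, 50, 0, 0), matthews(0, 0, 50, 50))
```

For the cubic data the rank correlation is perfect while r falls short of 1, which is the situation in which a transformation of the data may strengthen the linear relationship.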

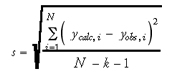

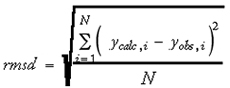

Other commonly used goodness-of-fit measures are the residual standard deviation (s) (Eq. 4) and the root-mean-square difference (rmsd) (Eq. 5) between the calculated versus observed values:

where N is the number of data objects and k is the number of terms used in the model. For these quantities, smaller values indicate a better fit of the data.
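As a rough illustration, the two goodness-of-fit measures can be computed as follows. The sketch assumes the conventional definitions, in which s divides the residual sum of squares by N − k − 1 degrees of freedom and rmsd divides it by N; the function names and numbers are hypothetical.

```python
import numpy as np

def residual_sd(y_obs, y_calc, k):
    """Residual standard deviation s for a model with k terms (assumes N - k - 1 degrees of freedom)."""
    resid = np.asarray(y_calc, dtype=float) - np.asarray(y_obs, dtype=float)
    dof = resid.size - k - 1
    if dof <= 0:
        raise ValueError("not enough data points for the number of model terms")
    return float(np.sqrt((resid ** 2).sum() / dof))

def rmsd(y_obs, y_calc):
    """Root-mean-square difference between calculated and observed values."""
    resid = np.asarray(y_calc, dtype=float) - np.asarray(y_obs, dtype=float)
    return float(np.sqrt((resid ** 2).mean()))

# Hypothetical observed and calculated activities for six compounds, two-term model.
y_obs = np.array([5.0, 6.0, 7.0, 8.0, 9.0, 10.0])
y_calc = y_obs + np.array([0.1, -0.1, 0.2, -0.2, 0.1, -0.1])
print("s    =", residual_sd(y_obs, y_calc, k=2))
print("rmsd =", rmsd(y_obs, y_calc))
```

Because s accounts for the number of model terms, it grows relative to rmsd as more terms are added to fit the same data.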

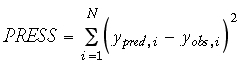

For cross-validation, PRESS (Eq. 6) and q2 (Eq. 7) have been suggested to provide good estimates of the real prediction error of a model:

It should be noted that, although its notation suggests a squared quantity, q2 can be negative. Generally speaking, a q2 value of 0.5–0.6 is regarded as the minimum acceptance criterion for a reliable QSAR model.
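A minimal leave-one-out sketch shows how PRESS and q2 can be obtained and why q2 can fall below zero. It assumes the common definitions PRESS = Σ(y_obs − y_pred)² over the left-out compounds and q2 = 1 − PRESS / Σ(y_obs − ȳ)², with a multiple linear regression as the underlying model; the function name and data are illustrative.

```python
import numpy as np

def loo_press_q2(X, y):
    """Leave-one-out PRESS and q2 for a linear regression with intercept."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)
    A = np.column_stack([np.ones(n), X])       # design matrix with intercept column
    press = 0.0
    for i in range(n):
        keep = np.arange(n) != i               # leave compound i out
        coef, *_ = np.linalg.lstsq(A[keep], y[keep], rcond=None)
        press += float((y[i] - A[i] @ coef) ** 2)
    ss_total = float(((y - y.mean()) ** 2).sum())
    return press, 1.0 - press / ss_total

x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = 2.0 * x + 1.0

# A descriptor that explains the activity gives q2 close to 1.
press_fit, q2_fit = loo_press_q2(x, y)

# A model with no descriptors (intercept only) predicts each left-out compound
# from the mean of the others, which is worse than using the overall mean.
press_null, q2_null = loo_press_q2(np.empty((5, 0)), y)
print(q2_fit, q2_null)
```

For the intercept-only model each left-out error equals N/(N − 1) times the deviation from the overall mean, so q2 = 1 − (N/(N − 1))², which is negative for any N.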

Generally, the following characteristics are regarded as good traits of a robust and high-quality QSAR model:

- All descriptors used in the model are significant, and specifically, none of the descriptors should be found to account for single peculiarities (e.g., unique compound-descriptor association).

- There should be no high-leverage or outlier compounds in the training set; otherwise, the statistical parameters reported by the model may not be meaningful.

- The cross-validation performance should be significantly better than that of randomized tests but not very different from that of the training set and external test predictions.
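The comparison with randomized tests mentioned in the last criterion can be sketched as a simple response-scrambling experiment. This is only an illustration under stated assumptions: the data are synthetic, the model is a linear regression, and the leave-one-out residuals are obtained with the standard hat-matrix shortcut for least-squares fits.

```python
import numpy as np

def q2_loo(X, y):
    """Leave-one-out q2 of a linear regression (hat-matrix shortcut for LOO residuals)."""
    A = np.column_stack([np.ones(len(y)), X])
    H = A @ np.linalg.pinv(A)                      # hat (projection) matrix
    loo_resid = (y - H @ y) / (1.0 - np.diag(H))   # exact LOO residuals for least squares
    return float(1.0 - (loo_resid ** 2).sum() / ((y - y.mean()) ** 2).sum())

def y_randomization(X, y, n_trials, rng):
    """q2 values obtained after randomly permuting the activities."""
    return np.array([q2_loo(X, rng.permutation(y)) for _ in range(n_trials)])

rng = np.random.default_rng(0)
X = rng.normal(size=(30, 2))                       # synthetic descriptors
y = 1.5 * X[:, 0] - 2.0 * X[:, 1] + 0.1 * rng.normal(size=30)

q2_real = q2_loo(X, y)
q2_scrambled = y_randomization(X, y, n_trials=50, rng=rng)
print("q2 (real data)     :", q2_real)
print("q2 (scrambled, max):", q2_scrambled.max())
```

A model whose q2 is not clearly separated from the scrambled distribution has probably captured a chance correlation.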

Application of Adaptive Methods in QSAR

Variable Selection

The crux of data reduction is to select a subset of features retaining the maximal information content of the data.65 Feature extraction, exemplified by principal component analysis and discriminant analysis, transforms a set of features into a reduced representation that captures the major variance of data. Feature selection, on the other hand, attempts to identify a small subset of features from all available features. The following is a brief account of how artificial intelligence methods have been applied to feature selection in QSAR applications.

Artificial Neural Networks

The earliest application of ANN for variable selection in a QSAR application was reported by Wikel and Dow.41 After training a neural network by using all descriptors, those inputs having large weights between the input and the hidden nodes were selected for further analysis. The “magnitude-based” algorithm was tested on the widely-studied Selwood data set,66 which comprises a series of 31 antifilarial antimycin analogs, each parameterized by 53 physicochemical descriptors (Note: Livingstone, a co-author of the Selwood paper, has recently expressed the opinion that this particular data set should not be regarded as a “standard” but rather a “difficult” data set due to poor data distribution39). In their pioneering work, Wikel and Dow employed a color map to indicate the magnitude of the weight values. This led to the identification of a set of three relevant descriptors. Used in a multiple linear regression, they produced a QSAR model that was marginally better (r = 0.77, rcv = 0.68) than the three-descriptor model originally published by Selwood (r = 0.74, rcv = 0.67).66 Despite this encouraging result, it was argued that this descriptor selection scheme seemed somewhat subjective. Specifically, an overtrained network (vide infra), which is characterized by large weight values for nearly all of the descriptors used, can result in poor discrimination between the relevant and irrelevant descriptors. Thus, it is important to adopt a robust early stopping criterion during neural network training in order to achieve correct pruning of unnecessary input descriptors.41,42
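The idea behind magnitude-based selection can be sketched in a few lines. Wikel and Dow inspected a color map of the weights visually; the numerical score used here (the sum of absolute input-to-hidden weights per descriptor) is an assumption made for illustration, and the weight matrix is hypothetical rather than the result of any real training run.

```python
import numpy as np

def rank_inputs_by_weight_magnitude(w_input_hidden, names):
    """Rank descriptors by the summed absolute weights leaving each input node."""
    saliency = np.abs(np.asarray(w_input_hidden, dtype=float)).sum(axis=1)
    order = np.argsort(-saliency)                  # largest saliency first
    return [(names[i], float(saliency[i])) for i in order]

# Hypothetical trained weights: 4 descriptors (rows) x 3 hidden nodes (columns).
weights = np.array([
    [0.05, -0.10, 0.02],
    [1.80, -2.10, 0.90],
    [0.30, 0.20, -0.25],
    [-1.20, 0.70, 1.50],
])
names = ["d1", "d2", "d3", "d4"]

ranking = rank_inputs_by_weight_magnitude(weights, names)
for name, score in ranking:
    print(name, round(score, 2))
```

In an overtrained network nearly all rows would show large values, which is why such a ranking loses its discriminating power without early stopping.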

Recently, Tetko and coworkers benchmarked five neural network-based descriptor pruning methods in a series of three studies.42,67,68 Two magnitude-based and three error-based methods were examined. The magnitude-based methods are similar in principle to the Wikel and Dow method, in which the pruning follows direct analysis of the magnitudes of the outgoing weights. The error-based methods, on the other hand, detect the sensitivity of an input by monitoring the change in the network error due to the elimination of neuron weights associated with that input. Unlike the magnitude-based sensitivity methods, the error-based pruning methods assume that the training error is at a minimum, so if an early stopping criterion is applied the assumption is no longer justified.42 Overall, the authors concluded that no significant advantage of one method over the others was evident from their analyzed data sets. In general, error-based sensitivity methods are computationally more demanding, particularly those which require higher derivatives of the error functions (e.g., optimal brain damage,69 or optimal brain surgeon algorithms70). All algorithms give similar results for both linear and non-linear simulated sets of data (artificial structured data sets) and are capable of identifying the least sensitive input descriptors. In all cases, predictivity of the ANN can be improved by the removal of redundant input descriptors. The five pruning algorithms were also tested with three real QSAR data sets, with the conclusion that the behavior of the different pruning methods seems to deviate more significantly in real QSAR modeling. However, the authors stated that it is very difficult to determine which pruning method would be universally applicable since the efficacy of each method is most likely data-dependent.

Genetic Algorithm

The earliest application of GAs to descriptor selection in chemometric and QSAR applications was reported by Leardi and coworkers in 1992.71 Their initial test was performed on an artificial data set containing 16 objects and 11 descriptors. The simplicity of this test set allowed them to compare the result of a GA solution with that of an exhaustive enumeration of all possible subset selections. They observed that, on average, the globally optimal solution was found by the GA within one-quarter of the time required by the exhaustive search. This was in contrast with the result obtained by the stepwise regression method, which found a solution that was ranked only sixth overall in the list generated by the exhaustive search. After the initial validation, the GA-MLR method was applied to a real chemometric data set consisting of 41 samples and 69 descriptors. For this example, the stepwise regression approach yielded a 12-descriptor model with a cross-validated variance of 83%. The top models from five independent GA simulations yielded cross-validated variances of 89%, 85%, 81%, 91%, and 84%, demonstrating the stochastic nature of GA optimization. It is therefore appropriate to perform multiple runs on the same input data when a GA is employed for feature selection. In addition, it is quite possible that the simplistic implementation of GA used in this exercise failed to escape from local optima. More advanced evolutionary algorithms, such as the “ring” or “island” models of parallel GAs, which partition the population into sub-populations and allow for a “migration” operator between sub-populations, may lead to improved convergence.56,72
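The comparison between a GA and exhaustive enumeration can be mimicked on a small synthetic problem of the same size (16 objects, 11 descriptors). The sketch below is a toy GA-MLR written for illustration, not the algorithm of Leardi and coworkers: it assumes a fixed subset size of three descriptors, leave-one-out q2 as the fitness, and simple elitism, crossover, and mutation operators, all of which are choices made here.

```python
import itertools
import numpy as np

def q2_loo(X, y):
    """Leave-one-out q2 of an MLR fit (hat-matrix shortcut for the LOO residuals)."""
    A = np.column_stack([np.ones(len(y)), X])
    H = A @ np.linalg.pinv(A)
    loo_resid = (y - H @ y) / (1.0 - np.diag(H))
    return float(1.0 - (loo_resid ** 2).sum() / ((y - y.mean()) ** 2).sum())

def exhaustive_best(X, y, k):
    """Score every k-descriptor subset and return the best one."""
    subsets = itertools.combinations(range(X.shape[1]), k)
    return max(subsets, key=lambda s: q2_loo(X[:, list(s)], y))

def ga_select(X, y, k, rng, pop_size=20, generations=30, p_mut=0.3):
    """Toy GA over k-descriptor subsets with elitism, crossover and mutation."""
    n_desc = X.shape[1]

    def fitness(subset):
        return q2_loo(X[:, list(subset)], y)

    def random_subset():
        return tuple(sorted(rng.choice(n_desc, size=k, replace=False).tolist()))

    pop = [random_subset() for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        parents = pop[: pop_size // 2]             # elitism: the best half survives
        children = []
        while len(parents) + len(children) < pop_size:
            i, j = rng.choice(len(parents), size=2, replace=False)
            pool = sorted(set(parents[i]) | set(parents[j]))
            # crossover: draw k descriptors from the union of both parents
            child = set(rng.choice(pool, size=k, replace=False).tolist())
            if rng.random() < p_mut:               # mutation: swap one descriptor
                child.remove(int(rng.choice(sorted(child))))
                unused = [d for d in range(n_desc) if d not in child]
                child.add(int(rng.choice(unused)))
            children.append(tuple(sorted(child)))
        pop = parents + children
    return max(pop, key=fitness)

rng = np.random.default_rng(0)
X = rng.normal(size=(16, 11))                      # 16 objects, 11 synthetic descriptors
y = X[:, 1] - 2.0 * X[:, 4] + 0.5 * X[:, 7] + 0.1 * rng.normal(size=16)

best_exhaustive = exhaustive_best(X, y, k=3)
best_ga = ga_select(X, y, k=3, rng=rng)
print("exhaustive:", best_exhaustive, q2_loo(X[:, list(best_exhaustive)], y))
print("GA        :", best_ga, q2_loo(X[:, list(best_ga)], y))
```

Because the GA is stochastic, repeating the run with different seeds and comparing the top subsets is the practical analogue of the multiple independent simulations described above.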

Rogers and Hopfinger proposed a new GA-based method, termed genetic function approximation (GFA), for descriptor selection. A conventional GA, which contains crossover and mutation operators, is coupled with MLR for parameter estimation. There are two principal enhancements in the GFA approach. The first is the introduction of a few non-linear basis functions such as splines and quadratic functions. The second is the use of the lack-of-fit (LOF) error measure as the fitness criterion; because LOF penalizes the use of large numbers of descriptors, it safeguards against overfitting. With their GFA algorithm, Rogers and Hopfinger discovered a number of linear QSAR models for the Selwood antimycin data set which were significantly better than those obtained by Selwood and by Wikel and Dow.41,66 Interestingly, there seems to be little overlap in the selected descriptors among the three studies, other than the use of clogP, the sole descriptor encoding hydrophobicity. Similar to the finding by Leardi et al, the top 20 GFA models have a range of cross-validated r values from 0.85 to 0.81, again supporting the notion that many independent QSAR models can provide useful activity correlation on the same data. The use of multiple statistical models in the context of consensus scoring was also suggested by the authors, who observed that averaging the predictions of many top-rated models can lead to better predictions compared to any individual model.
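The penalizing effect of the LOF measure can be shown with a small calculation. The chapter does not give the formula, so the sketch assumes Friedman's form as it is usually quoted for GFA, LOF = LSE / (1 − (c + d·p)/M)², where LSE is the least-squares error, c the number of basis functions, p the number of features, d a smoothing parameter, and M the number of samples; the parameter names and example values are hypothetical.

```python
def lack_of_fit(lse, n_basis, n_features, n_samples, smoothing=1.0):
    """Assumed Friedman-type lack-of-fit score: LSE / (1 - (c + d*p)/M)**2."""
    penalty = 1.0 - (n_basis + smoothing * n_features) / n_samples
    if penalty <= 0.0:
        raise ValueError("model is too large for the number of samples")
    return lse / penalty ** 2

# Two hypothetical models with the same least-squares error on 31 compounds:
# the one with more terms receives the worse (larger) LOF score.
lof_small = lack_of_fit(lse=1.0, n_basis=3, n_features=3, n_samples=31)
lof_large = lack_of_fit(lse=1.0, n_basis=6, n_features=6, n_samples=31)
print(lof_small, lof_large)
```

A larger model is therefore preferred only if it lowers the least-squares error enough to outweigh the growth of the denominator penalty.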

At about the same time, two research groups published another GA variant, also using the Selwood data set as a benchmark. The algorithm was termed MUSEUM (Mutation and Selection Uncover Models) by Kubinyi and Evolutionary Programming (EP) by Luke.40,73 The major difference between this algorithm and GFA is the absence of a crossover operator and the reliance solely on point mutation to generate new solutions. Independently, both groups discovered other excellent three-descriptor combinations that might have been found by GFA but were probably destroyed during the evolution because GFA did not employ elitism to preserve the best solutions for the next generation.

Very recently, Waller and Bradley proposed a novel variable selection technique called FRED (Fast Random Elimination of Descriptors) which contains elements from both evolutionary programming and Tabu search.46 In contrast to the other common genetic and evolutionary algorithms, the complete solutions (i.e., the descriptor combinations) are not propagated to the next generation, but rather, only those descriptors are retained which contribute positively to the genetic makeup of the fittest solutions. Descriptors that appear to be less useful are kept in a Tabu list, and are subsequently eliminated if they are not found to be beneficial during later iterations. Application of the FRED algorithm to the Selwood data yielded a final population of three-descriptor combinations that can be represented by 13 different input variables. This analysis was consistent with the results of the previously published methods. In particular, the selection of descriptors shared much similarity with the sets of descriptors that are chosen in the top GFA, MUSEUM, or EP models. The authors argued that it would be more difficult for poorer descriptors to be masked by some exceptionally good combination of descriptors and subsequently proliferate to the next generations, because only potentially good (single) descriptors are being passed to subsequent generations. It should be emphasized that the result of the FRED algorithm is to prune a potential list of descriptors by eliminating the less relevant descriptors; at the end of the calculation the best solutions are not necessarily guaranteed by the algorithm. Accordingly, one interesting utility of the FRED algorithm would be to treat it as a pre-filter for redundant descriptors so that an exhaustive enumeration could be applied.

All of the above variants of GAs are used in conjunction with MLR. A natural extension is to replace MLR with PLS, which is often regarded as a modern alternative and has also played a critical role in the development of the CoMFA methodology. Interestingly, relatively few researchers have investigated methods of variable selection for PLS analysis in the past (other than filtering out the variables with insignificant variance). One explanation might be that PLS has a high tolerance towards noisy data, and any number of input variables may be used. This attitude has changed somewhat over the past few years, as more people have begun to recognize the benefits of feature selection, and the use of hybrid approaches such as GA-PLS or GOLPE-PLS has become increasingly popular. Some examples of the application of GA-PLS include the QSAR studies performed by Funatsu and coworkers.74–78 In a QSAR study of 35 dihydropyridine derivatives,74 these researchers discovered that the cross-validation statistics (q2 = 0.69) of the GA-PLS model based on only six descriptors are superior to those of the full PLS model using all 12 descriptors (q2 = 0.62). Furthermore, elimination of the less relevant descriptors makes the QSAR model more interpretable, and the selected descriptors were consistent with an earlier analysis of Gaudio et al, who had performed an extensive investigation on the same set of compounds.79 The usefulness of variable selection in PLS analysis was further demonstrated in a subsequent QSAR study of 57 benzodiazepines.75 Two GA-PLS models, based on 10 and 13 descriptors, yielded essentially identical q2 values (0.84), and were again significantly better than the model derived from a PLS analysis using all 42 descriptors (q2 = 0.71). The apparent improvement in predictivity was verified by an external validation. Using D-optimal design, the data set was partitioned into a training set of 42 compounds and a test set of 15 compounds.
The r2 values of the test predictions for the two GA-PLS models were 0.70 and 0.74, respectively, which compare favorably with that of the full PLS model (r2 = 0.59). Overall, the results from this and other research groups underscore the value of descriptor selection in the context of QSAR modeling.80

Parameter Estimation

Artificial Neural Networks

The key strength of a neural network is its ability to allow for flexible mapping of the selected features by implicitly manipulating their functional dependence. Unlike multiple linear regression analysis, ANN handle both linear and non-linear relationships without adding complexity to the model. This capability partly offsets the longer computing time required by a neural network simulation because it avoids the need for separate examination of each possible non-linearity.81 In addition, it has been suggested that neural networks are parsimonious approximators of mathematical functions;82 that is to say, an ANN tends to fit the required function with fewer parameters than other methods, and is particularly effective if non-linearity is evident in the data set. Thus, this type of approach seems to be exceptionally well suited for the study of quantitative structure activity relationships.

The first applications of neural networks in the area of QSAR were published by Aoyama, Suzuki, and Ichikawa in 1990 with the promise that “the effective application of such neural networks may bring forth a breakthrough in the current state of QSAR analysis”.83,84 In their initial applications, neural networks were used to perform tasks that were previously accomplished by multiple linear regression analysis. Three data sets were examined: a set of mitomycin analogues with anticarcinogenic activity, a series of antihypertensive arylacryloylpiperazines, and a large series of benzodiazepines used as tranquilizers. In these studies, substituent fragment descriptors, together with a few structural indicator variables, were used to encode molecular structures. In all cases the neural networks were able to deduce QSAR models that were superior to MLR fits. However, the use of an excessive number of connecting weights (in one example, 420 weights were used to fit 60 compounds) seemed questionable,85 partly because this contradicted a previously established guideline for MLR: the ratio of compounds to parameters should be at least five.50 In addition, the authors included both linear and squared terms of molecular descriptors in the analysis, which seems unnecessary since an ANN ought to be able to uncover the appropriate functional transform of each descriptor.

The initial promise, as well as some obvious limitations, of the first ANN applications to the field of QSAR motivated many subsequent investigations aiming to gain a better understanding of this novel tool.85 These were exemplified by the outstanding work of Andrea and Kalayeh, who performed a comprehensive QSAR investigation of a large data set of dihydrofolate reductase (DHFR) inhibitors.86 This data set had been previously analyzed by Silipo and Hansch using MLR,87 and contains 256 compounds that were characterized using seven substituent descriptors augmented by six indicator variables (Table 1). It is noteworthy that the indicator variables had been introduced by Silipo and Hansch to capture certain commonalities and structural features that could not be easily explained using standard fragment descriptors. It should be recognized that the net effect of some combinations of indicator variables and substituent parameters is to encode non-linear effects. For example, one of the terms in a regression equation may be a fragment-based descriptor (e.g., MR) that reflects how activity generally increases with the size of a given substituent. But at the same time, there may be an indicator variable present in the equation that penalizes the presence of an excessively large group at the same position (i.e., the binding pocket has a finite size). Thus, the net effect is that the relationship between substituent size and bioactivity is non-linear. With the exception of I1, which accounts for possible differences in DHFR active sites or assay conditions, all indicator variables were related to substituent positions already encoded by other fragment parameters. In the published MLR model, the indicator variables explained a significant amount of variance, as well as many outliers in the data set.
For this data set, it was found that the r2 value of MLR decreased from 0.77 to 0.49, and the number of outliers (defined by the authors as those compounds with an absolute prediction error greater than 0.8) in the model increased from 20 to 61 when indicator variables (I2 to I6) were excluded from the analysis. However, because indicator variables provide little or no insight into the physicochemical factors that govern biological activity, their utility in de novo design of new analogues is limited. In this regard, it is encouraging to observe that it was not necessary to utilize these indicator variables in the ANN model. In fact, using only seven substituent descriptors and (the non-structural) I1, the ANN model yielded an r2 value of 0.85 and only 12 outliers. Thus, the neural network seemed to circumvent the need for indicator variables and was able to extract relevant information directly from the various hydrophobic, steric, and electronic parameters. In addition, Andrea and Kalayeh conducted a cross-validation experiment on a subset of 132 compounds (DHFR from Walker 256 leukemia tumors, i.e., compounds with I1 = 1) and obtained an r2cv value of 0.79 for ANN. This result once again compared very favorably with the corresponding statistics from MLR, which yielded r2cv values of only 0.64 and 0.30 for models with and without the use of indicator variables, respectively. Moreover, in contrast to the first ANN applications of Aoyama and coworkers, Andrea and Kalayeh clearly demonstrated that a neural network implicitly handles higher-order effects and also showed that it was not necessary to include non-linear transformation of the descriptors as inputs to the network.

Table 1

Descriptors used by Andrea and Kalayeh.

Andrea and Kalayeh also presented the first example of ANN overfitting in the area of QSAR by demonstrating that the training error typically decreases with the number of hidden nodes, whereas the test set error initially decreases but later increases when an excessive number of hidden nodes is deployed. Furthermore, they considered test set statistics as a criterion to select an optimal neural network architecture (Note: For this reason their test set should not be regarded as an external set in the true sense because it was involved during model building). They also proposed a parameter, ρ, which is the ratio of the number of data points to the number of network weights, to help to define optimal network architecture. Though it was later shown that ρ by itself may not be sufficient to minimize the risk of overfitting,88 the general principles that were elucidated in this work are still valid and have probably saved many researchers from the perils of flawed QSAR models, i.e., models that yield outstanding performance for the training set but no predictivity for new compounds.
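The ratio of data points to network weights is easy to tabulate for candidate architectures. The sketch below assumes a fully connected three-layer network with one bias per hidden and output node; whether Andrea and Kalayeh counted bias terms in exactly this way is not stated in the text, and the hidden-layer sizes shown are hypothetical.

```python
def n_weights(n_inputs, n_hidden, n_outputs=1, bias=True):
    """Number of adjustable weights in a fully connected three-layer network."""
    b = 1 if bias else 0
    return (n_inputs + b) * n_hidden + (n_hidden + b) * n_outputs

def data_to_weight_ratio(n_data, n_inputs, n_hidden, n_outputs=1):
    """Ratio of the number of data points to the number of network weights."""
    return n_data / n_weights(n_inputs, n_hidden, n_outputs)

# 256 compounds and 8 inputs (seven substituent descriptors plus I1), as in the
# DHFR study; the hidden-layer sizes are hypothetical.
for hidden in (2, 5, 10, 20):
    print(hidden, n_weights(8, hidden), round(data_to_weight_ratio(256, 8, hidden), 2))

# The example criticized earlier (420 weights fitted to 60 compounds) gives a
# ratio far below one.
print(round(60 / 420, 2))
```

Adding hidden nodes lowers the ratio quickly, which is consistent with the observation that test set error eventually rises as the network grows.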

Following the publications of Aoyama and co-workers83,84 and Andrea and Kalayeh86 in the early 1990s, the use of ANN in the area of structure-activity relationships has flourished. Figure 1 shows a histogram of the number of publications related to the application of ANN in QSAR analysis according to a bibliographic search.89 These reports include many correlative structure-activity studies using standard descriptors,88,90–114 or some more novel descriptors such as topological indices,88,115,116 molecular similarity matrices,117–121 quantum chemistry descriptors,122–125 autocorrelation vectors of surface properties,19,126 hydrogen bonding and lipophilicity properties,127,128 and thermodynamic properties based on ligand-receptor interactions.110 More recently, a number of novel ANN applications have reached beyond the premise of structure correlation with in vitro or in vivo potency and have ventured to solve some more challenging problems such as the prediction of pharmacokinetic properties,129–132 bioavailability,133 toxicity,106,125,134–141 carcinogenicity,142,143 prediction of mechanism of action,144 or even the formulation of a discrimination function to distinguish drug-like versus nondrug-like compounds145–147 (see Chapter 4). In addition to these quantitative studies, ANN has also been employed as a visualization tool to reveal qualitative trends in SAR analyses.53,117,118,144,148 The widespread use of ANN in the area of molecular design has stimulated the continuous influx of novel neural network technologies to the field, including the introduction of Bayesian neural networks,145,149–151 cascade-correlation learning architecture,67,152 evolutionary neural networks,57 polynomial neural network153 and intercommunication architecture.154 This cross-fertilization between artificial intelligence and pharmaceutical research is likely to continue as more robust ANN toolkits become commercially or freely available. 
Many excellent technical reviews have been written on the application of ANN in QSAR, and interested readers are encouraged to refer to them.60,81,82,85,155–164

Figure 1

Number of published reports on application of neural networks in the field of QSAR.

The discussion above can be summarized as a set of general guidelines for the effective use of neural networks in QSAR modeling (and statistical modeling in general). First, the law of parsimony calls for the use of a small neural network, if possible. The number of adjustable parameters should be small with respect to the number of data points used for model construction; otherwise, poor predictive behavior may result from data overfitting. Neural network modeling can also benefit from the use of a large data set, which can facilitate location of a generalized solution from the underlying correlation in the data. In addition, the training patterns must be representative of the data to be predicted. This will lead to a more realistic predictive performance on the external test set with respect to the training result. It is advantageous to make use of efficient training algorithms, for example those that use second derivatives of the error function for the weight update, which can give better convergence characteristics. Finally, the input descriptors must obviously be relevant for the data modeling process. The golden rule of “garbage in, garbage out” can never be over-emphasized.165

Hybrid Methods

GA-NN

The natural evolution of the next generation of QSAR methods is to apply artificial intelligence methods in both descriptor selection and parameter estimation. An example of such a hybrid approach was proposed by So and Karplus, who combined GA with ANN for QSAR analysis.119,120,166,167 This method, called genetic neural network (GNN), was first applied to an examination of the Selwood data set.166 The major aim of this work was to use a GA to select a suitable set of descriptors for use in the development of a QSAR. The effectiveness of the GA was demonstrated by its ability to select an optimal set of descriptors, as judged by comparison with exhaustive enumeration, in the GNN models. It appears that the improvement of the GNN QSAR over other published models (Table 2) is due to the selection of non-linear descriptors which the ANN is able to assimilate.

Table 2

Comparison of linear regression and neural network QSAR models of the Selwood data set. All models are based on three molecular descriptors.

In their next study,167 an improvement to the core GNN simulator was made by replacing the problematic steepest descent training algorithm by a more robust scaled conjugate gradient optimizer,168 leading to substantial performance gains in both convergence and the speed of computation. To provide an extended test of the enhanced GNN simulator, it was applied to a set of 57 benzodiazepines which had been previously studied by Maddalena and Johnston using a backward elimination descriptor selection strategy and neural network training.99 It was found that the GNN protocol discovered a number of 6-descriptor QSAR models that are superior to the best (and arguably more complex) models reported by Maddalena and Johnston.

After appreciable success with standard fragment-based 2D descriptors, So and Karplus extended the use of GNN to the analysis of a molecular similarity matrix to derive 3D QSAR models.119,120 Molecular similarity is a measure based on the similarity between the physical or structural attributes of a set of molecules.169 This type of descriptor differs from conventional substituent parameters (e.g., π, σ, and MR) in the sense that it does not encode physicochemical properties which are specific for molecular recognition. The similarity index is derived from numerical integration and normalization of the field values, and represents a global measure of the resemblance between a pair of molecules based on their spatial and/or electrostatic attributes. Thus, instead of a correlation between substituent properties and activities, a similarity-based QSAR method establishes an association between global properties and activity variation among a series of lead molecules. The implicit assumption is that globally similar compounds have similar activities.170 Figure 2 is a schematic diagram showing the different stages in the construction of an SMGNN (Similarity Matrix Genetic Neural Network) QSAR model. The initial validation was performed on a corticosteroid-binding globulin steroid data set,119 which had been extensively studied in the past by many novel 3D-QSAR methods.17,19,20,22,117,119,171–176 The first SMGNN application focused mainly on method validation, in particular the sensitivity and effect of parameters related to: (a) electrostatic potential calculations (type of atomic charges, truncation scheme for electrostatic potential, and dielectric constant); (b) similarity index (Carbó,177 Hodgkin,178 linear and exponential formulae179); (c) grid parameters (spacing, size, and location); and (d) number of similarity descriptors in the QSAR model.
The results of the sensitivity studies demonstrated that the SMGNN QSAR obtained was very robust with respect to variation in most of the user-defined electrostatic parameters. The fact that the various similarity indices are highly correlated also means that the choice of an index had negligible effect in determining the quality of the QSAR. The grid-related settings also had relatively little impact on the overall result. The key parameter seems to be the number of descriptors used in the model; it is important to have enough descriptors to characterize the data set but not so many that overfitting can arise. Overall, the SMGNN model is superior to those obtained from the PLS and GA-MLR methods, and also compares favorably with the results from other established 3D-QSAR methods. This approach was further validated using eight different data sets, with impressive results.120 The biological activities and physicochemical properties of a broad range of chemical classes were successfully correlated. One of the shortcomings of the SMGNN method is that interpretation of the QSAR model is difficult because the similarity index is not related to physicochemical attributes of the molecules. However, it is remarkable that the SMGNN QSAR model was consistent with all known SAR for CBG-steroid binding and, therefore, seems to handle the physical attributes leading to optimal binding in an implicit manner.
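The construction of a similarity matrix from gridded field values can be sketched as follows. The code assumes the usual forms of the Carbó index, ΣPaPb / √(ΣPa² ΣPb²), and the Hodgkin index, 2ΣPaPb / (ΣPa² + ΣPb²), with the integrals replaced by sums over a common grid; the field values are random numbers standing in for real electrostatic or steric fields, and the function names are illustrative.

```python
import numpy as np

def carbo_index(pa, pb):
    """Carbo similarity: sensitive to the shape of the fields, not their magnitude."""
    return float((pa * pb).sum() / np.sqrt((pa ** 2).sum() * (pb ** 2).sum()))

def hodgkin_index(pa, pb):
    """Hodgkin similarity: sensitive to both shape and magnitude of the fields."""
    return float(2.0 * (pa * pb).sum() / ((pa ** 2).sum() + (pb ** 2).sum()))

def similarity_matrix(fields, index=carbo_index):
    """Pairwise similarity matrix; each row serves as the descriptor vector of one molecule."""
    n = len(fields)
    S = np.ones((n, n))                    # self-similarity is 1 for both indices
    for i in range(n):
        for j in range(i + 1, n):
            S[i, j] = S[j, i] = index(fields[i], fields[j])
    return S

rng = np.random.default_rng(0)
field_a = rng.normal(size=1000)            # placeholder field values on a grid
field_b = 2.0 * field_a                    # same shape, twice the magnitude
field_c = rng.normal(size=1000)            # unrelated field

S_carbo = similarity_matrix([field_a, field_b, field_c], index=carbo_index)
S_hodgkin = similarity_matrix([field_a, field_b, field_c], index=hodgkin_index)
print(S_carbo.round(3))
print(S_hodgkin.round(3))
```

In an SMGNN-type analysis the columns of such a matrix would be the candidate descriptors from which the GA selects a small subset.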

Figure 2

Schematic diagram for the construction of SMGNN 3D-QSAR models. Reprinted with permission from: So S-S, Karplus M. J Med Chem 1997; 40:4347–4359. ©1997 American Chemical Society.

Recently, Borowski and coworkers implemented and extended the SMGNN methodology to evaluate a set of 5-HT2A receptor antagonists in a 3D QSAR study.121 The data set included 26 2- and 4-(4-methylpiperazino)pyrimidines, as well as clozapine, which was used as a reference compound. Due to molecular symmetry the pyrimidines can have multiple mappings to the clozapine reference structure. Five alternative alignment schemes were suggested by the authors, and the q2 values of the models from each alignment were compared with the values derived from 30 randomly chosen alignment sets, which served as a baseline to test statistical significance. The alignment set with a particularly high predictivity was assumed to contain the correct superimposition of the bioactive conformations of these molecules. An interesting finding was that, although it was recognized that the piperazine nitrogen ought to be protonated upon binding to the 5-HT2A receptor, setting an explicit positive charge on the ligand was detrimental to the performance of the SMGNN QSAR model. This is because the charge has a pronounced effect in the electrostatic calculation, rendering the similarity indices less discriminating. One suggestion from the authors is to consider only the neutral, deprotonated form during the similarity calculation. The best steric and electrostatic SMGNN models both contain five descriptors, and yield q2 values of 0.96 and 0.93, respectively. Both models are significantly better than random models with scrambled output values, which return q2 values of 0.28 ± 0.16 and 0.29 ± 0.14, respectively. In summary, the results of this independent study strongly support the use of the SMGNN methodology in 3D QSAR studies.

The research group of Peter Jurs is also very active in the development and application of GA-NN type hybrid methods in QSAR and QSPR studies.138 In their procedure, the full data set is usually divided into three parts. The majority (70–80%) of the compounds belong to the training set (tset), and the remainder of the compounds are usually evenly divided to give a cross-validation set (cvset) to guide model development, and an external prediction set (pset) to validate the newly developed QSAR models. Jurs has defined three types of statistical models derived from their multivariate data analysis:

- Type I model is a linear regression model whose selection of descriptors is based on a stochastic combinatorial optimization routine (e.g., SA or GA);

- Type II model is a non-linear ANN model that directly adopts the descriptors used in the Type I model;

- Type III model is a fully non-linear ANN model developed in conjunction with a SA or GA for descriptor selection.

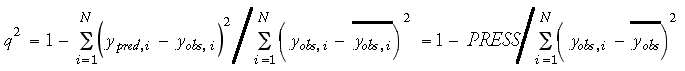

The quality of a model is based on the following fitness (or cost) function (Eq. 8):38

where the coefficient of 0.4 was determined empirically to yield models with enhanced external predictivity.
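Since Eq. 8 is available here only as an image, the following sketch assumes the form in which this cost function is commonly quoted for the ADAPT neural network routines, cost = rms(tset) + 0.4 |rms(tset) − rms(cvset)|; the function name and the numerical values are hypothetical.

```python
def model_cost(rms_tset, rms_cvset, coefficient=0.4):
    """Assumed form of the cost: training-set rms error plus a penalty on the
    gap between training-set and cross-validation-set rms errors."""
    return rms_tset + coefficient * abs(rms_tset - rms_cvset)

# Two hypothetical models: the second fits the training set better but
# generalizes worse to the cross-validation set, so it receives a higher cost.
cost_balanced = model_cost(rms_tset=0.30, rms_cvset=0.32)
cost_overfit = model_cost(rms_tset=0.25, rms_cvset=0.50)
print(cost_balanced, cost_overfit)
```

The penalty term discourages models whose training error is much smaller than their cross-validation error, which is the signature of overfitting.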

In a recent application, this GA-NN method was used to study the QSAR of 157 compounds with inhibitory activity against acyl-CoA:cholesterol O-acyltransferase (ACAT), a biological target implicated in the reduction of triglyceride and cholesterol levels.38 Twenty-seven compounds were removed from the initial data set due to high experimental uncertainty of their IC50 values, and the remaining compounds were partitioned to obtain tset, cvset and pset with 106, 11, and 13 compounds, respectively. A large number of descriptors were generated using their in-house automated data analysis and pattern recognition toolkit (ADAPT) software package, and were pruned according to minimal redundancy and variance criteria. The best Type I model is a nine-descriptor MLR that has an rmstset of 0.42 and an rmspset of 0.43 log units. Using the same set of descriptors, they generated a Type II ANN-based model that has significantly lower rms errors of 0.36 and 0.34 log units for the tset and pset. Finally, to take full advantage of the non-linear modeling capability of ANN, they conducted a more comprehensive search using a combined GA-NN simulation. The top Type III model employed eight descriptors and yielded an rms error of 0.27 for both tset and pset. Four of the eight descriptors used in the Type III model are identical to those selected by the linear Type I model. It is suggested that the descriptors unique to the Type III model provide relevant information and are also non-linear in nature.

The general applicability of the GA-NN approach in QSAR was further verified in another study, in which a large set of sodium ion-proton antiporter inhibitors was investigated.180 Following the established procedure, Kauffman and Jurs divided the 113 benzoylguanidine derivatives into a 91-member tset, an 11-member cvset, and an 11-member pset. Using an SA feature selection algorithm, they searched for predictive models containing from 3 to 10 descriptors. The optimal Type I linear regression model used 5 descriptors and yielded an rms error of 0.47 and r² = 0.46 for the tset. The predictive performance on the pset was, however, rather poor (rms = 0.55; r² = 0.01), indicating a general deficiency of this linear model. The replacement of MLR by ANN in functional mapping led to moderate improvement. The corresponding Type II model reported an rms error of 0.36 and r² of 0.68 for the tset, and significantly lower prediction errors for the pset compounds (rms = 0.42 and r² = 0.44). The greatest increase in accuracy was seen in the construction of the Type III model, where the rms error of the tset dropped to 0.28 and r² increased to 0.81. The corresponding pset statistics were 0.38 and 0.44, respectively.

The authors also explored the consensus scoring concept proposed by Rogers and Hopfinger,181 and examined the effect of prediction averaging using a committee of five ANNs. They confirmed that the composite predictions were more reliable than those from individual predictors, largely because they make better use of the available information. The rms error of the prediction set for the consensus model was 0.30 compared to an error of 0.38 ± 0.09 from the five separate trials. This result is also consistent with an earlier GNN study on the Selwood data set, stating that averaging of the outputs of the top-ranking GNN models led to marginally better cross-validation statistics compared to the individual models.166
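The effect of prediction averaging can be illustrated with a minimal sketch. The activity values and the three-member committee below are invented for illustration and are not taken from the cited study; the function names are likewise hypothetical. The sketch only shows the mechanics: average the outputs of the individual networks compound by compound, then compare the rms error of the averaged prediction with that of each member.

```python
import math

def rms_error(predicted, observed):
    """Root-mean-square error between two equal-length sequences."""
    if len(predicted) != len(observed) or not observed:
        raise ValueError("need two non-empty sequences of equal length")
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared) / len(squared))

def consensus_prediction(committee):
    """Average the predictions of all committee members, compound by compound."""
    n_models = len(committee)
    return [sum(column) / n_models for column in zip(*committee)]

# Hypothetical observed activities (log units) for three pset compounds.
observed = [5.0, 6.0, 7.0]

# Hypothetical predictions from three independently trained networks.
committee = [
    [5.4, 5.8, 7.3],
    [4.7, 6.3, 6.8],
    [5.2, 6.1, 7.2],
]

individual_rms = [rms_error(pred, observed) for pred in committee]
consensus_rms = rms_error(consensus_prediction(committee), observed)

print("individual rms:", [round(e, 3) for e in individual_rms])
print("consensus rms: ", round(consensus_rms, 3))
```

In this toy case the errors of the individual networks partly cancel, so the averaged prediction has a lower rms error than any single member. In general, averaging guarantees only that the consensus rms error does not exceed the mean of the individual rms errors.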

One major drawback of QSAR models derived from the ADAPT descriptors is the difficulty of using them to design novel analogues with desirable bioactivity. For example, the five descriptors selected by the GA in the non-linear Type III model were MDE-14, which is a topological descriptor encoding the distance (edges) between all primary and quaternary carbon atom pairs; GEOM-2, the second major geometric moment of the molecule; DIPO-0, the dipole moment; PNSA-3, a combined descriptor with atomic charge weighted partial negative surface area; and RNCS-1, the negatively charged surface area. Because these are whole-molecule descriptors, even a seemingly small substituent modification (e.g., changing methyl to hydroxy) can sometimes lead to significant changes in the entire set of descriptor values. Thus, even when an optimal set of descriptor values is known, it can still be a challenging task to engineer a molecule that fulfils the necessary conditions. One brute-force solution is to enumerate a massive virtual library and deploy the QSAR model as a filtering tool. Another possibility is to perform iterative structure optimization using the predicted activity as the cost function. The latter approach is the basis of many de novo design programs. For example, the EAinventor package provides an interface between a structure optimizer, with some embedded synthetic intelligence, and a user-supplied scoring function. This is a powerful combination that creates a synergy between synthetic considerations and targeted potency (see Chapter 5).

In the conclusion of a recent review article on neural networks,85 Manallack and Livingstone wrote:

“We feel that the combination of GAs and neural networks is the future for the [QSAR] method, which may also mean that these methods are not limited to simple structure-property relationships, but can extend to database searching, pharmacokinetic prediction, toxicity prediction, etc.”

Novel applications utilizing hybrid GA-NN approaches are beginning to appear in the literature, and will be discussed in detail in Chapter 4.

Comparison to Classical QSAR Methods

Chance Correlation, Overfitting, and Overtraining

In the examples presented in the previous section, we have discussed the utility of GA for the selection of descriptors to be used in combination with multivariate statistical methods in QSAR applications. Although variable selection is appropriate for the typical size of a data set in conventional QSAR studies (i.e., 20–200 descriptors), particularly if the initial pool of descriptors exceeds the number of data objects, selection may still carry a great risk of chance correlation.40 The ratio of the number of descriptors to the number of objects used in model building can be a useful parameter indicating the likelihood of chance correlation. As a general guideline, it has been suggested that when this ratio is greater than five, GA-optimized descriptor selection may produce unreliable statistical models.80 Obviously, other factors related to signal-to-noise, redundancies, and collinearity in the data can also be critical. To further reduce this risk, it is recommended that randomization tests be performed as an integral part of standard validation procedures in any application that involves descriptor selection. In addition, it may be beneficial to implement an early stopping point during GA evolution in order to prevent overfitting of the data. Based on empirical observation, the fitness of the population usually increases very rapidly during the early phase, and then the improvement slowly levels off. The reason for this behavior is that the useful information in the data is usually modeled quite rapidly during the initial stage. Later, the GA begins to fit the noise or idiosyncrasies of the data, sometimes using additional parameters.
To determine an optimal stopping point for GA optimization, Leardi suggested a criterion that is based on the difference in the statistical fit between the real and the randomized data set.80 In this scheme, the evolution cycle that corresponds to the maximum difference between the two sets of statistics is considered an optimal termination point. Related to this concept, another intriguing idea is to combine the statistics gathered in both real and randomized training, yielding a composite cost function that may be used to evaluate individual solutions during the course of GA optimization (Dr. Andrew Smellie, personal communication). It is also known that the use of GA can sometimes produce solutions containing non-essential descriptors hidden within a subset of useful descriptors.46 A useful means to eliminate these irrelevant descriptors from the GA selection is through a hybridization operator, which periodically examines the entire population and discards the non-contributing descriptors using a backward elimination procedure.80 This idea originated from the observation that forward selection in the GA-selected subset can greatly reduce the number of irrelevant inputs.182
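The stopping criterion described above can be sketched in a few lines. This is a minimal illustration, not Leardi's implementation: it assumes that one fit statistic per GA generation (higher is better, for example a cross-validated r²) has already been recorded for both the real and the randomized data. The function name and the two fit curves are invented for the example.

```python
def optimal_stopping_generation(real_fit, random_fit):
    """Return (generation index, gap) where real-minus-randomized fit is largest.

    Both sequences hold one fit statistic per GA generation (higher = better).
    Which statistic is used is an assumption of this sketch.
    """
    if not real_fit or len(real_fit) != len(random_fit):
        raise ValueError("need two non-empty sequences of equal length")
    gaps = [real - rand for real, rand in zip(real_fit, random_fit)]
    best = max(range(len(gaps)), key=lambda g: gaps[g])
    return best, gaps[best]

# Made-up fit curves: the real data are modeled quickly, while the
# randomized data catch up later as the GA starts to fit noise.
real_fit = [0.30, 0.55, 0.68, 0.72, 0.74, 0.75]
random_fit = [0.05, 0.10, 0.18, 0.30, 0.40, 0.48]

stop_at, gap = optimal_stopping_generation(real_fit, random_fit)
print(f"stop after generation {stop_at} (zero-based), gap = {gap:.2f}")
```

In practice the randomized curve would itself be an average over several independent scramblings of the activity values, since a single randomization can be unrepresentative.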

It has been demonstrated that ANNs often produce superior QSAR models compared to models derived by the more traditional approach of multiple linear regression. The key strength of a neural network is that, through its hidden layers, it can perform a non-linear mapping of the molecular descriptors to the biological activities of the compounds. The quality of the fit to the training data can be particularly impressive for networks that have many adjustable weights. Under such circumstances, however, the neural network may simply memorize the entire data set and behave effectively as a look-up table. It is then doubtful that the network can extract a relevant correlation from the input patterns and give a meaningful assessment of other unknown examples. This phenomenon is known as overfitting of data: a neural network may reproduce the training data set almost perfectly yet make erroneous predictions for unseen test objects. It is fair to point out that the purpose of QSAR is to understand the forces governing the activity of a particular class of compounds and to assist drug design, and a look-up table will therefore not aid medicinal chemists in the design of new drugs. What is needed is a system that is able to provide reasonable predictions for previously unknown compounds. The use of an ANN with an excessive number of network parameters should therefore be avoided. There are two advantages of adopting networks with relatively few processing nodes. First, the efficiency of each node increases and, consequently, the time of the computer simulation is reduced significantly. Second, and probably more importantly, the network can generalize the input patterns better, and this often results in superior predictive power. However, caution is again needed to ensure that the network is not overconstrained, since a neural network with too few free parameters may not be able to learn the relevant relationships in the data. Such an analysis will collapse during training, and again no reliable predictions can be obtained. Thus, it is important to find an optimal network topology that strikes a balance between these two extreme situations.

While the numbers of nodes in the input and output layers of a neural network are typically pre-determined by the nature of the data set, the user can control the number of hidden units, and consequently the number of adjustable weights, in the network. It has been suggested that a parameter, r, can help to determine an optimal setting for the number of hidden units.86 The parameter r is defined as the ratio of the number of data points in the training set to the number of adjustable network parameters. The number of network parameters is simply the sum of the number of connections and the number of biases in the network. A three-layered back-propagation network with I input units, H hidden units and O output units will have H × (I + O) connections and H + O biases. The total number of adjustable parameters is therefore H × (I + O) + H + O. The range 1.8 < r < 2.2 has been suggested by Andrea and Kalayeh as a guideline for acceptable r values.86 It is claimed that for r << 1, the network will simply memorize the training patterns; for r >> 3, the network will have difficulty generalizing from the data. The concept of the r ratio has had a significant impact on the design of neural network architecture in many subsequent QSAR studies.85 It is now possible to make a reasonable initial choice for the number of hidden nodes. Nevertheless, the suggested range of 1.8 < r < 2.2 is empirical, and is also expected to be case-dependent. For example, some redundancies may already exist in the training patterns, so that the effective number of data points is in fact smaller than anticipated. On a related note, there is another rough guideline that allows the user to choose the number of hidden nodes independently of the number of data points.
It is the so-called geometric pyramid rule, which states that the number of nodes in each layer follows a pyramid shape, decreasing progressively from input layer to output layer in a geometric ratio.60 That is to say, a good starting estimate of the number of hidden nodes will be the geometric mean of the numbers of input and output nodes in the network.
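Both guidelines reduce to simple arithmetic, sketched below. The function names are hypothetical, and the example network (106 training compounds, eight input descriptors, one output) merely borrows the training-set size and descriptor count of the ACAT study discussed earlier for illustration; it does not reproduce the architecture actually used there.

```python
import math

def n_adjustable_parameters(n_input, n_hidden, n_output):
    """Connections plus biases of a fully connected three-layer network."""
    connections = n_hidden * (n_input + n_output)
    biases = n_hidden + n_output
    return connections + biases

def r_ratio(n_training, n_input, n_hidden, n_output):
    """Training-set size divided by the number of adjustable parameters."""
    return n_training / n_adjustable_parameters(n_input, n_hidden, n_output)

def hidden_units_in_r_range(n_training, n_input, n_output,
                            r_min=1.8, r_max=2.2):
    """Hidden-layer sizes whose r value lies strictly inside (r_min, r_max)."""
    return [h for h in range(1, n_training + 1)
            if r_min < r_ratio(n_training, n_input, h, n_output) < r_max]

def pyramid_hidden_units(n_input, n_output):
    """Geometric pyramid rule: geometric mean of input and output node counts."""
    return max(1, round(math.sqrt(n_input * n_output)))

# Illustrative case: 106 training compounds, 8 descriptors, 1 activity output.
n_train, n_in, n_out = 106, 8, 1

for h in (4, 5, 6):
    print(h, n_adjustable_parameters(n_in, h, n_out),
          round(r_ratio(n_train, n_in, h, n_out), 2))

print("hidden units with 1.8 < r < 2.2:",
      hidden_units_in_r_range(n_train, n_in, n_out))
print("geometric pyramid rule estimate:", pyramid_hidden_units(n_in, n_out))
```

For this case the two heuristics disagree: only five hidden units (51 parameters, r ≈ 2.08) satisfy the r criterion, whereas the pyramid rule suggests three (√8 ≈ 2.8). This illustrates why both should be treated as starting estimates rather than fixed prescriptions.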